ENGL 3130: Business Writing

Professor: Dr. George Pullman

If you've never met a professor, you might wonder what we do. We do research, write grant applications, write articles and books, review colleagues' work, give public presentations, participate in university governance, and teach (distill hundreds of books and articles into syllabi, create quizzes and tests, assign papers, offer feedback, grade, write letters of recommendation, design new classes). Most of the professors you will meet care deeply about teaching. They just wish they had more time for it. Remember there's a difference between learning and being entertained. Remember also that you don't have to like someone to learn from them.

Office address: 2424, 25 Park Place

Phone: 404-413-5458

When you do call someone, have a voice message prepared in advance. No more than 10 seconds. Speak slowly. Just say who you are, what you want, how to reach you. Repeat your number at the end, s-l-o-w-l-y. If you need more than 10 seconds, use email or face-to-face. I never use the phone, so with me, go straight to email.

Office Hours: I am available via gpullman@gsu.edu

When emailing a professor, the subject line should be class number: day, time. Like this, for example, ENGL 3130: TR, 8:00. That way you don't have to introduce or explain yourself. The proper salutation is, 'Professor Lastname.' Don't use txt spk. Use complete sentences and standard punctuation. Don't ask questions Google answers. Don't ask, 'What did I miss?' (unless you want to antagonize your professor). Sign off with Thank you, and your name. Don't expect an immediate response. Many professors answer email only during a set time, say between 4 and 5 pm. I'm compulsively responsive to email but tend to maintain radio silence between 10 pm and 5 am.

I am early to class and frequently stay late, if you want to speak face-to-face.

Don't be anonymous. Go to your professors' office hours at least once; early on is best. (Think of a smart question first: why did you get interested in SUBJECT HERE? What do SUBJECT majors do when they graduate? Most people, not just profs., like to talk about themselves, especially indirectly). Generally speaking, if you behave as though you take your learning seriously, a prof. will take you seriously. Otherwise, you are just a face in a sea of ever-changing faces.

You may also make an appointment: office, Skype/FaceTime, or coffee shop.

Critical Thinking

Introduction

There's thinking and then there is critical thinking.

If a baseball bat and a ball cost a total of $1.10, and the bat costs $1 more than the ball, then how much does the ball cost?

If you answered 10¢, you were thinking. If you answered 5¢, you were thinking critically. The bat costs a dollar more than the ball. This example comes from a book you should read called Thinking, Fast and Slow, by Daniel Kahneman. The problem is that our brain inclines us toward thinking the answer must be 10¢, and because we saw that "truth" in a flash, we embrace an error as if it were a fact and might even fight to defend it.

If you dislike something you don't understand, that's thinking. If you like something because you are familiar with it, that's thinking. If you hang on to your initial opinions in the face of contradictory evidence, that's thinking. If you dislike people who disagree with you, that's thinking too. As you can tell from that list of inclinations toward errors, you should give up thinking.

We resist critical thinking because thinking, going with our gut or our traditions, is fast and satisfying. The only thing more desirable than getting the right answer eventually is getting the wrong answer quickly. Critical thinking, on the other hand, is slow and cognitively taxing, and we don't always know the math (or logic or what someone else is thinking); we don't always know what we need to know in general, and that makes us want to jump to something we do know rather than take the time to learn what we need to learn. A couple of hours pondering hard problems and you're tired; everybody is.
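The arithmetic behind the bat-and-ball problem can be checked in a few lines. This is only a sketch: the function name `solve_bat_and_ball` is made up for illustration, and prices are kept in whole cents to avoid rounding surprises.

```python
def solve_bat_and_ball(total_cents: int, difference_cents: int) -> tuple[int, int]:
    """Return (ball, bat) in cents, given their total and the bat's premium.

    ball + bat = total and bat = ball + difference,
    so 2 * ball = total - difference.
    """
    ball = (total_cents - difference_cents) // 2
    bat = ball + difference_cents
    return ball, bat


ball, bat = solve_bat_and_ball(110, 100)
print(f"ball = {ball} cents, bat = {bat} cents")  # ball = 5 cents, bat = 105 cents

# The fast answer fails the check: a 10-cent ball implies a 110-cent bat.
intuitive_total = 10 + (10 + 100)
print(f"total with a 10-cent ball: {intuitive_total} cents")  # 120, not 110
```

Writing the two conditions down and solving them is the slow path; the fast path skips the second condition.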

A traveler comes to a fork in the road heading to two villages. In one village, the people always tell lies, and in the other village, the people always tell the truth. The traveler needs to conduct business in the village where everyone always tells the truth. A man from one of the villages is standing in the middle of the fork, but there's no indication of which village he resides in. The traveler approaches the man and asks him just one question. From the man's answer, he knows which road to follow. What is the question? (David Lieberman, You Can Read Anyone)

Getting the right answer doesn't help if you asked the wrong question.

What follows is more of a parlor trick than a bit of directly instructional reasoning, but it makes a useful point. Play along.

- Pick a number between 1 and 10.

- Multiply it by 9.

- Add together the two digits of the new number.

- Subtract 5.

- Now, if 1 = A and 2 = B and 3 = C and so on, what letter is represented by your number?

- Next, think of a European country that begins with that letter.

- Then take the last letter of that country and think of an animal that begins with that letter.

- Finally, take the last letter of that animal and think of a color that begins with that letter.

I bet you a dollar I know what your answers are.

If I was right, it's because of how the number 9 works. Any number you chose of your own volition would lead to the number 4. Given the rules of the game, 4 = D and the subsequent rules led to the answers I bet you gave. That's not magic, it's manipulation. My point is simply that getting the right answer can distract you from looking more closely at the questions you are trying so hard to answer.
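Why every starting number ends at 4 can be verified by brute force. The sketch below (the function name `nine_trick` is illustrative, not part of the original page) runs the steps for each number from 1 to 10; every multiple of 9 from 9 to 90 has digits that add up to 9, so subtracting 5 always leaves 4.

```python
def nine_trick(n: int) -> str:
    """Follow the steps of the trick and return the resulting letter."""
    product = n * 9
    digit_sum = sum(int(d) for d in str(product))
    number = digit_sum - 5
    return chr(ord("A") + number - 1)  # 1 -> A, 2 -> B, 3 -> C, ...


results = {n: nine_trick(n) for n in range(1, 11)}
print(results)  # every starting number maps to "D"
```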

If you go into an observational setting thinking you know what you are looking for, your powers of concentration may keep you from seeing something important.

The goal of critical thinking is to overcome egocentricity. The goal of workplace-based writing and research is to overcome corporate bias.

In addition to inertia and over-confidence, critical thinking is impeded by how our brains work when unsupervised (cognitive biases) and by errors in logic (fallacies) and errors in statistical inference. What follows is an overview of those three sources of errors in judgment and decision making. You should make a point of remembering the concepts that follow. You should also make a point of daily searching for examples in your world, in your own thinking and other people's thinking as well. It is always easier to find these errors in other people's thinking than in our own. So you should seek feedback from people who don't see the world the way you do.

Be advised, however, that knowing these errors in thinking does not inoculate against them. Human beings are hard-wired to these defaults. Being constantly vigilant (and skeptical) will help, but you will succumb to default thinking from time to time anyway. We all do.

Cognitive Biases

Naïve realism -- believing that you see the world clearly and understand it perfectly. If you hear yourself saying "in reality" or "obviously," stop and think critically. What assumptions are you making that make it obvious to you that might not be shared by others? Two people can look at the same object and see something different, either because they are looking at the same thing from different angles or because they interpret the object differently. The latter happens most often when the object is abstract, like a concept or an idea. Just as the objects in our field of vision are assembled by our brains and interpreted by our minds, so our expectations, experiences, and assumptions influence what we infer about the parts of the world we can't see directly. "He must have been guilty or else why would he have run?" (What It Means, Drive-By Truckers) is a rhetorical question for some people (the answer implicit in the question) but for others it's a real question. Perhaps he ran because experience taught him that cops are dangerous.

- Just because it's obvious doesn't mean it's true.

- Just because you're certain doesn't mean you're right.

Apophenia -- the human tendency to see patterns in random data, to mistake coincidence for meaning. Can you see the outline of a dolphin in the rose (image, right)? While there's nothing inherently wrong with seeing faces in toast or trees in clouds, basing arguments or beliefs on random patterns is very uncritical thinking, especially since once we come to believe something, it is very hard to un-believe (or unsee) it. [Technically, the dolphin is an example of pareidolia, which is a subset of apophenia.]

Given: 2, 3, 5, 7; what's the next number? 11? Sure, if it is safe to assume that the first four items in the set are there because they are the prime numbers in sequence. What if that's not why they are there? What if you were looking at a code of some kind and the regularity of the numbers hasn't yet manifested itself? Maybe the next number is 16 and the one after that is 13. In that case you made a false, albeit reasonable, assumption. If you ran a random number generator long enough, printing each randomly generated number as it was generated, eventually you would see the first four primes in sequence. But they wouldn't be there because they are the first four primes; they would be just those numbers randomly displayed in what could easily be mistaken for a specific sequence.
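The claim about the random number generator is easy to try. The following is a sketch under stated assumptions: the generator produces single digits 0 through 9, the target run is a parameter (here 2, 3, 5, 7, the first four primes), and the name `draws_until_pattern` is made up for illustration.

```python
import random
from typing import Optional, Sequence


def draws_until_pattern(target: Sequence[int], rng: random.Random,
                        max_draws: int = 1_000_000) -> Optional[int]:
    """Draw random digits 0-9 until `target` appears as a consecutive run.

    Returns the number of draws made when the run completes, or None if
    it never shows up within `max_draws`.
    """
    window = []
    for draw_count in range(1, max_draws + 1):
        window.append(rng.randint(0, 9))
        window = window[-len(target):]  # keep only the most recent digits
        if window == list(target):
            return draw_count
    return None


rng = random.Random(42)  # fixed seed so the run is repeatable
draws = draws_until_pattern((2, 3, 5, 7), rng)
print(f"2, 3, 5, 7 appeared after {draws} random draws")
```

The run shows up sooner or later, and nothing about the generator "knows" about primes. The pattern is in the reader, not in the data.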

Let's say you are trying to figure out why people like to drink coffee in the coffee shop in the library instead of leaving the building to get coffee elsewhere and you ask that question of the first person that walks in the door. Would you bet the next person would have the same reason? Maybe if you asked 20 people and discovered that there were only four answers, then you might bet a small amount of money on number 21 having one of the four answers. But that person might have a fifth, as yet unheard of answer. How many library goers would you need to ask before you achieved saturation, the point where you haven't heard a new answer in so long that you can safely figure any new answer could be treated as unique or at least so rare as to be irrelevant? Well, how much longer can you afford to stand there asking people as they walk in?

- Just because you see it clearly doesn't mean it's real.

Confabulation -- making up a story to explain what you can't understand (mythologizing). People dislike doubt so much, and want so badly to feel smart, that we will fill any gap with a story and then accept the story as truth. Often, the story replaces the experience and fiction is all that's left.

- Just because you can explain something doesn't mean you understand it.

- Measure all explanations against the evidence.

- Suspend judgment in the absence of conclusive evidence.

The illusion of coherence -- just as we will mythologize to avoid confronting our ignorance, we will assimilate (explain away) or dismiss (ignore) any evidence that contradicts our beliefs. Human beings, most of us anyway, are so troubled by ambiguity that we would rather be wrong than uncertain. But painting over the cracks doesn't fix the foundation.

Stories are powerful because they make sense of the world for us, they make action meaningful, and they justify behavior, good or bad. But they are just stories; they are not reality. Reality includes a bunch of noise and a bunch of data we mistake for noise, as well as a bunch of noise we mistake for data.

- Embrace ambiguity.

- Learn to live with uncertainty.

- Look for contradictions and anomalies.

Confirmation bias -- we humans are attracted to evidence that supports our beliefs and we tend to ignore or discount contradictory evidence. Once we believe something, we are hard-wired to find proof everywhere. Because we also tend to like people like us, that is, people who have similar views, we tend to surround ourselves with people who confirm our prior beliefs, and thus it is even harder to think critically. This bias for confirmation is exacerbated by the way our Internet practices tend to filter what we see to conform to our preferences, our likes, our friends' likes, and so on. It's quite easy to mistake the block where you live for the whole world.

- Just because you have evidence doesn't mean you're right.

- Seek disconfirmation and counterarguments.

Fundamental attribution error -- believing that your failures are caused by bad luck, your successes by hard work, while others' failures are the result of character flaws, their successes just luck or a system rigged in their favor. People who tend to make excuses and blame others when they aren't getting what they want are gripped by the fundamental attribution error. The reality is closer to the fact that every one of us lives in a set of interrelated systems, some support us, others may impede us, but none of us succeeds or fails alone.

- Don't assume the best of yourself and the worst of others.

- Own your own errors.

- Acknowledge that luck and other people's contributions may have helped you succeed.

Normalcy bias -- thinking that everything is just fine when disaster looms. A great example of this kind of thinking en masse was the Atlanta "Snowpocalypse" of 2014. Two inches of snow was forecast for metro Atlanta, and everyone went about their business as usual. Next thing you know, the roads got icy, the highways backed up; the surface streets gridlocked, and thousands of people spent the night in their freezing cars.

Availability bias -- letting your personal experience influence your perceptions and inferences. Your experience might not be representative or even relevant. If you watch the news, you are likely to have a more negative worldview than if you don't, largely because "the news" means homicides, domestic violence, kidnappings, spectacular car accidents, fires and so on. These dramatic images color your worldview if you don't contextualize them. The availability bias enables the "information bubble" that most of us live in because the examples we use to enable our thinking tend to come from reinforcing sources.

In the context of workplace-based writing and research, availability means you need to pay special attention to the order you ask questions in. One question may cause a person to think about something that changes their mood and thus influences how they answer the next question or questions. Put that question lower down in the list and the bias might go away.

- Don't use personal experience, or current events, as evidence.

- Get a broader perspective; increase the sample size.

Framing -- How you see something, how you define a situation (Is this a threat or an opportunity? A problem or a possibility?), directly affects how you respond. If you can look at things from different perspectives, you can capture insights that might lead you to different responses and therefore different decisions. If someone offers you a choice between two alternatives, you should always think: what about both or neither? The wider your perspective, the more options you will see, and the less likely you are to get tunnel vision, like the person who is so focused on a goal that when he achieves it, he has no idea what to do next.

Logical Fallacies

You've no doubt taken a basic Philosophy class and so are familiar with the logical fallacies. If you haven't or you don't remember them, you should look them up. For our purposes, there are only a few of special importance.

A business proposal is an argument. It offers a description of a situation that indicates a problem and proposes a solution. For it to be successful, it has to be convincing. To be convincing, you need to focus on the evidence. The proposal itself may not be evidence heavy, depending on the nature of your intended audience, but you need to know what you are doing.

- Post hoc ergo propter hoc (after this, therefore because of this)

- Whenever we have a problem or see someone else experiencing one, we tend to look for the cause because it makes sense that if the cause is addressed, the problem will go away. There are at least three pitfalls here that need to be avoided. Sometimes there's no going back. And sometimes there's no single cause. Often, however, we will seize on the last thing that happened just before the problem occurred and consider it to blame. We all have a tendency to look at events as if history were a line of dominoes. This happened, then that happened; so this caused that.

Look for causes, but don't assume that because A happened just before B, A was the cause. There may be more than one cause. Or you may be looking at a correlation, not a cause.

- Correlation is not causation

- If you take a class in Psychology you will hear this over and over again. Whenever two variables change together, we tend to assume the changes are directly related, that one causes the other. Often there is no causal relation. One changes and the other does too. Maybe there's something in the environment that is independently acting on both. Maybe they exist in completely separate contexts that happen to intersect at the moment we are observing them.

The problem here is the same as with nearly all faulty thinking: jumping to the obvious conclusion. Remember Kahneman's baseball bat.
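The "something in the environment acting on both" case can be simulated. In the sketch below everything is hypothetical: a hidden factor drives two measured variables, `a` and `b`, and neither variable appears in the other's formula. The helper `pearson` is a plain implementation of the standard correlation coefficient.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical hidden factor (think: the weather) that nobody is measuring.
hidden = [rng.gauss(0, 1) for _ in range(2000)]

# Two observed variables. Each depends on the hidden factor plus its own
# noise; neither one influences the other.
a = [h + rng.gauss(0, 0.5) for h in hidden]
b = [h + rng.gauss(0, 0.5) for h in hidden]

r = pearson(a, b)
print(f"correlation between a and b: {r:.2f}")  # strongly positive
```

Someone who saw only `a` and `b` would be tempted to say one causes the other. Changing `a` by hand would do nothing to `b`.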

- Arguments from authority (I'm smart; do as I say!), tradition (We've always done it this way), intimidation (Do as I say or else!), social pressure (Everyone else is; FOMO), and other extraneous (aka red herring) assertions.

- When it comes to your business proposal, you don't want to use any of these strategies. You don't want to strong-arm people into thinking they have a problem, especially when they don't have the problem, and you don't want to argue for change or resistance to change when the best arguments you can make are based on the way things currently seem to be.

If there is evidence, focus on the evidence. If there is no evidence, then find it or recast the question so that you better understand what evidence there is. Remember that the absence of evidence doesn't prove the contrary. By the same token, a claim is neither true nor false until it has been proven; then, and only then, can its opposite be called false.

Proof or nothing is a hard standard to achieve and very hard to maintain, especially if disaster or the image of it is vividly apparent. The more stressed we are, the quicker we think, and quick thinking leads to errors in thought and judgement.

Bullshit ain't cake no matter who says it is, or how loudly or how often or who else says it is, no matter how scared you are. Try to focus on the evidence.

One caveat: if you don't understand the evidence and people with more experience and expertise say they do, and make an honest effort to explain it to you, then you should probably suspend your disbelief.

- Overgeneralization

- If a movie got two stars, does that mean it's lousy? Strong evidence isn't wide evidence; loud shouldn't be convincing on its own. How many people voted? What did they give more stars to?

If everybody you know loves (or hates or is indifferent to) something, you may be inclined to think everybody feels the same way. That's an overgeneralization because the people you know are not a representative sample of the whole population. Unless you are a connector with thousands of random people in your network or an influencer who can shift public opinion just by holding one (Malcolm Gladwell, The Tipping Point), the people you know are a selected sample and therefore proof of nothing on their own.

- False analogy

- If two things are the same, then what is true of one is true of the other. If they are not the same, however, then what is true of one will only be true of the other if they have the relevant characteristic in common, if they are similar in the right way. If they differ in the right way, then no matter how much else they have in common, the argument is an argument from false analogy.

The less we know about something, the more likely we are to miss subtle distinctions, distinctions that might render an argument from analogy false. So whenever you find yourself making an argument from analogy, from two things being similar, ask yourself if they are similar in the right way or just similar in lots of ways or even maybe just one big distracting way.

False analogies often lead to false assumptions, especially when we don't notice that we are assimilating two different things. No child is identical to his or her parents. No member of a group necessarily has all of the characteristics of that group. Similar isn't the same. Or, as the popular Thai expression says it, same same but different.

- The fallacy fallacy

- Just because an argument is faulty doesn't mean the conclusion is false; it just hasn't been proven. The absence of evidence isn't proof of innocence; it's only a clear indication that prosecution would be wrong, hence the presumption of innocence.

A valid argument isn't necessarily true either. From a logical perspective, you can derive a valid conclusion from a false premise if you get the form right, even though what you've just "proven" is not true. Where your thinking comes from can dictate where it goes.

- Argument from mere assertion

- Saying that something is true doesn't prove it is. Seems obvious, but in fact, we tend to believe what we've frequently heard, especially if it's being shouted at us.

Logic and Emotion

For hundreds of years people assumed that emotions warped decisions because there were plenty of examples where a person or people decided to do something and then later regretted their decision. From this perspective, a perfect world would be a perfectly rational world. We now think that pure rationality is rare and, when present, a sign of a cognitive impairment (alexithymia, a difficulty identifying and describing one's own feelings). We need to feel in order to think critically. We just need to be aware of our own states and the states of others. If a person is indifferent, knowing why may be important. If a person is agitated or distracted, their state suggests they may change their mind later. That doesn't mean what they think while stressed is necessarily wrong, only that they may think differently once they come down.

Feelings are caused by the feeler's interpretations and inferences, not the actions of others. As the authors of Crucial Conversations say, other people don't make you mad; you make you mad. As the Daoist tradition has always maintained, suffering comes from within.

Common Statistical Errors

- Naïve Statisticism

- Believing that numbers don't lie, confusing statistical artifacts with actual phenomena ("average" is a numerical concept, not a real thing; there are no average people), failing to realize that numerical precision is not proof of accuracy.

- Law of small numbers -- we tend to think that our personal experience represents a valid sample; it doesn't. If you want to model a statistical probability, you need multiple iterations. In fact, there is a real law, as opposed to a fallacy, called the law of large numbers, which says that the more iterations you have, the closer you get to the real odds.

- Consider a simulated coin toss: a row of randomly generated 1s and 2s, with the totals represented as heads and tails. The law of large numbers indicates that the greater the number of trials, the closer you will tend to get to the statistical average. In other words, a smaller sample is more likely to be skewed than a larger one. Given enough iterations, you will see the average tending toward the calculated odds.

In statistics, the mean is the sum of the numbers in a set divided by the number of elements in the set, what we commonly call the average. One would expect to get an average close to 1.5 in the case of a coin toss, assuming 1 = heads and 2 = tails ((1 + 2)/2 = 1.5). So the more times you toss the coin, the closer you would expect to get to 1.5. But of course you could get an even split with just two tosses.

With a coin toss, we can only come up with an average if we identify heads with a number and tails with another number, like 1 and 2, but given that a coin is either a head or a tail, the idea of an average isn't real. We can get the number, but it has no referent in the real world. That may or may not be a problem, depending on what we are trying to do, but it's a point worth remembering since people often mistake numbers for reality. Precision often masquerades as truth.

Mode = whichever outcome is most frequent in a given trial. When heads and tails come up equally often, there is no mode.
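A coin-toss simulation along these lines can be sketched as follows. This is not the page's original widget; the function name `mean_of_tosses` and the sample sizes are assumptions chosen for illustration, with 1 standing for heads and 2 for tails as in the text.

```python
import random


def mean_of_tosses(n_tosses: int, rng: random.Random) -> float:
    """Toss a fair coin n times (1 = heads, 2 = tails) and return the mean."""
    tosses = [rng.choice((1, 2)) for _ in range(n_tosses)]
    return sum(tosses) / n_tosses


rng = random.Random(2024)  # fixed seed so the run is repeatable
for n in (10, 100, 1_000, 100_000):
    print(f"{n:>7} tosses: mean = {mean_of_tosses(n, rng):.4f}")

large_sample_mean = mean_of_tosses(100_000, rng)
```

Small samples can land well away from 1.5; the large ones settle close to it. No single toss ever shows 1.5, which is the point about averages having no referent.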


- The gambler's fallacy

- Believing in a run of luck: that if it happened before it will happen again, or that if it's been going on for a while now, it's bound to change. When it comes to independent statistical events such as coin tosses, what happened before or might happen next is irrelevant. If a coin lands heads up 3 times in a row, are the chances of it landing heads up the next toss better, worse, or equal to 50%? If you answered 50%, you avoided the gambler's fallacy. Every time you flip an unbiased coin, the odds of heads are 50%. And because there are only two possible outcomes, two elements in the sample space, the odds of tails are also 50%. By the way, what are the odds of either heads or tails? 100%.

What are the odds of a coin landing heads up 4 times in a row? You calculate the odds by raising the probability of a single event to the power of the number of iterations: $P^{n}$, where $P$ is the probability of a single event and $n$ is the number of trials.

Since a coin toss has a sample space of two outcomes (it will always land either heads or tails), the odds of heads up are 50%, or 1/2. If we toss the coin 4 times, the odds of all heads are $(1/2)^{4}$, or 1/16.

What are the odds of 10 heads in a row? $(1/2)^{10}$, or 1/1024. If you toss a coin 10 times, and repeat that 1024 times, you will likely see a run of 10 heads at some point before you run out of trials. Because a coin has only two sides, the chances of seeing a run of 10 tails are the same, and in fact the chances of seeing any specific sequence of 10 tosses, such as THTHTHTHTH, are the same.

So if someone says, "Here's a coin. I bet you a dollar it will come up heads 4 times in a row," inspect the coin or just walk away; 1/16 aren't great odds. If you're feeling lucky, be prepared to give away a dollar. If the coin comes up heads 3 times and they offer double or nothing, should you take the bet? Your odds on the next toss are now 1/2. You went in expecting to lose a dollar, so winning 2 would be awesome. Odd thing, though: if you lose, handing over that dollar will feel twice as bad. Do you remember the rush before or the pain after the loss more exquisitely? If you remember the rush, you are a gambler.
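Both halves of the argument above can be checked: the exact odds of a run, and the claim that a streak tells you nothing about the next toss. The sketch assumes a fair coin; the function names and the 200,000-toss sample are illustrative choices.

```python
import random
from fractions import Fraction


def prob_all_heads(n_tosses: int) -> Fraction:
    """Exact probability that a fair coin lands heads n times in a row."""
    return Fraction(1, 2) ** n_tosses


print(prob_all_heads(4))   # 1/16
print(prob_all_heads(10))  # 1/1024


def heads_rate_after_streak(streak: int, n_tosses: int, rng: random.Random) -> float:
    """Share of heads on the toss right after `streak` heads in a row."""
    run = 0          # current number of consecutive heads
    followups = 0    # tosses that came right after a long-enough streak
    heads_after = 0  # how many of those follow-up tosses were heads
    for _ in range(n_tosses):
        is_heads = rng.random() < 0.5
        if run >= streak:
            followups += 1
            heads_after += is_heads
        run = run + 1 if is_heads else 0
    return heads_after / followups


rng = random.Random(7)  # fixed seed so the run is repeatable
rate = heads_rate_after_streak(3, 200_000, rng)
print(f"heads after three heads in a row: {rate:.3f}")  # close to 0.5
```

The coin has no memory: the rate after a streak hovers around one half, neither "due" for tails nor "hot" for heads.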

- Retrospective statistics

- A retrospective study looks at people in the same condition or state and looks backward to find commonalities that might suggest a cause for how they got there. In contrast, a prospective study starts at now and watches changes over time. Ideally one would hope for some predictive ability to come from either kind of study. But the problem with retrospective studies is selection bias.

Let's say you are interested in business success. So you find the 10 richest business people and look for commonalities in their backgrounds. Let's ignore the fact we defined success as wealth, a debatable assumption. Let's instead focus on the sample. Everyone we are looking at is a success. Every commonality we can find suggests a relation to success, but we don't know about people who also had that trait or experience and never were successful. Our sample was biased to begin with.
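The selection problem can be made concrete with a simulation. Everything in this sketch is invented for illustration: a trait held by about 70% of people and a success rate of 1% that, by construction, has nothing to do with the trait.

```python
import random

rng = random.Random(11)  # fixed seed so the run is repeatable

TRAIT_RATE = 0.7     # assumed share of people with the trait
SUCCESS_RATE = 0.01  # assumed share who succeed, regardless of the trait

with_trait = {"success": 0, "other": 0}
totals = {"success": 0, "other": 0}
for _ in range(200_000):
    has_trait = rng.random() < TRAIT_RATE
    group = "success" if rng.random() < SUCCESS_RATE else "other"
    totals[group] += 1
    with_trait[group] += has_trait

share_success = with_trait["success"] / totals["success"]
share_other = with_trait["other"] / totals["other"]
print(f"successes with the trait: {share_success:.0%}")
print(f"everyone else with the trait: {share_other:.0%}")
```

Looking only at the successes, the trait appears in roughly seven out of ten and looks like a secret. Only the second line, the group a retrospective sample leaves out, shows that the trait is just as common among everyone else.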

- Predictive analytics is a forward-looking application of retrospective statistics.

- The classic example is trying to predict what a given customer will buy based on what similar people bought at the same point in their purchasing past, where similarity is defined largely by purchase history. Let's say you know from tracking credit card purchases that 90% of people who bought 5 specific items purchased item X next. That would suggest that if there were a run on the 5 precursors, you had better beef up supplies of item X. If you had finer-grain data, say the percentage of times people bought Y or Z instead of X, then you might be able to offer a coupon to guide people toward Y or Z if they had better margins than X.

Let's say someone gets a puppy or a kitten. What do they tend to buy next? Food, toys, a bed, litter accessories, collars, etc. So, if the local rescue organization sells PetSmart your purchase info, a coupon is sure to be coming your way. When you graduate from college or buy a new home or a new car, or get married, or have a child, you will see similar seemingly "random" and yet serendipitous offers coming your way. Predictive analytics work because human beings tend to conform to type. It's too harsh to say we are herd animals, but tribal animals might be about right. How many of you who went to prom bought a corsage or a boutonniere? Is that still a thing? Each of us is an individual but we tend to conform to one or two of only a handful of patterns of behavior. Thus our behavior can be predicted with some probability of success.
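The "what do they buy next?" question can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the purchase histories are invented, `next_purchase_rates` is a hypothetical helper, and real predictive analytics uses far more data and more sophisticated models than a simple tally.

```python
from collections import Counter

# Hypothetical purchase histories, oldest purchase first.
histories = [
    ["puppy", "food", "collar", "bed"],
    ["puppy", "food", "toys"],
    ["kitten", "food", "litter"],
    ["puppy", "collar", "food"],
    ["kitten", "litter", "toys"],
]


def next_purchase_rates(histories, trigger):
    """For customers who bought `trigger`, tally what they bought right after it.

    Returns a dict mapping each follow-up item to its share of follow-ups.
    """
    counts = Counter()
    for history in histories:
        if trigger in history:
            i = history.index(trigger)
            if i + 1 < len(history):  # skip customers with no later purchase
                counts[history[i + 1]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {item: n / total for item, n in counts.items()}


rates = next_purchase_rates(histories, "puppy")
for item, rate in sorted(rates.items(), key=lambda pair: -pair[1]):
    print(f"After a puppy, bought {item} next: {rate:.0%}")
```

With this made-up data, two of the three puppy buyers bought food next, so food is the item to stock and the coupon to send. The prediction is only as good as the history behind it.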

Retrospective data can enable what is called the Texas Sharpshooter fallacy: painting a bull's eye around a bullet hole and claiming to be a sharpshooter, that is, seeing a chance cluster of events as proof of a theory you want to see proven. A greater rate of cancer in an area doesn't necessarily mean a carcinogen is present. The alarming number might be merely a statistical effect. Precise though numbers seem, even correctly derived statistics can be misleading if we attach the wrong assumptions to them or draw the wrong inferences from them. Whenever you start with data that has already been collected, you need to be especially wary of fitting a theory to it. Proof will require new data.

The classic example of using insight to overcome the flaws of historical data is taken from WWII. The Allied Forces were losing planes with deadly frequency. The planes that made it back were full of bullet holes. They figured reinforcing the metal would make the planes more bullet-proof, but more metal means more weight and too much weight would ground the planes. So, where to put the extra steel?

They took an inventory of the damage patterns on the returning planes and noticed clusters of holes as if those parts of the plane had been targeted. The first thought was to reinforce those places. What's wrong with that plan? What's wrong with the evidence? The planes that returned survived, so the holes in them were non-lethal. By inference, the planes that went down probably had lethal holes. The survivors were full of misses. The dead were full of hits. So, put the metal where the survivors' holes aren't. That solution is blindingly obvious in hindsight, but seeing it before it had been seen, that is the essence of good thinking -- to learn correctly from what isn't there. In this case, the available evidence, the historical record, was distracting because it led people to look at the survivors instead of the dead. (Jordan Ellenberg, How Not to Be Wrong, p 3 - 9.)
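The logic of the planes can be checked with a small simulation. This is a sketch, not history: the section names, the lethality numbers, and the assumption that hits land evenly across the plane are all invented for illustration.

```python
import random

SECTIONS = ["engine", "cockpit", "fuselage", "wings"]
# Assumed (made-up) chance that a single hit in each section downs the plane.
LETHALITY = {"engine": 0.8, "cockpit": 0.6, "fuselage": 0.1, "wings": 0.1}


def fly_mission(rng, hits=3):
    """Return (list of sections hit, survived?) for one simulated plane."""
    taken = [rng.choice(SECTIONS) for _ in range(hits)]  # hits land evenly
    survived = all(rng.random() > LETHALITY[s] for s in taken)
    return taken, survived


def hit_shares(planes):
    """Share of all recorded hits that landed in each section."""
    counts = {s: 0 for s in SECTIONS}
    for taken, _ in planes:
        for s in taken:
            counts[s] += 1
    total = sum(counts.values())
    return {s: counts[s] / total for s in SECTIONS}


rng = random.Random(0)  # fixed seed so the run is repeatable
fleet = [fly_mission(rng) for _ in range(10_000)]
returned = [plane for plane in fleet if plane[1]]

all_shares = hit_shares(fleet)        # what actually happened
seen_shares = hit_shares(returned)    # what the inspectors could see
for s in SECTIONS:
    print(f"{s:9} all planes {all_shares[s]:.0%}   returned planes {seen_shares[s]:.0%}")
```

Across the whole fleet, each section takes about a quarter of the hits. On the planes that come back, engine hits are scarce and fuselage and wing hits are plentiful, which is exactly the misleading pattern the inspectors saw.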

- Prospective or longitudinal studies

- Let's say we start observing 10 kids as they all enter the same kindergarten and keep watching as they progress from grade to grade to graduation and then we compare outcomes. What might we have learned, if anything? They all had the same education or at least they all attended the same school, but is that the same thing? To what extent were they identical when they arrived at day one? Isolating the source of differences is critical to predictability. Absent that, one doesn't know what kind of intervention might produce what result.

Longitudinal studies can be powerful. They are vital to medical advances. But they are also expensive and, obviously, time consuming and thus rare. Using the data from previous longitudinal studies is a common alternative but fraught with the difficulties of historical data.

- Target populations (N) and representative samples (n)

- The target population is anyone directly or indirectly relevant. In the case of your business proposal, that means anyone who suffers from the problem you want to solve and anyone who might be adversely affected by the solution. Because "everyone" (N) might be a big number, you might need to sample the whole, that is, find enough representatives of N to get a clear picture of everyone, but a small enough number (n) to be able to interview or survey or watch, depending on how you plan to learn about your population.

- Sampling problems -- all errors in sampling lead to non-representative samples, groups that can't speak for the whole. If you use a non-representative sample, you will necessarily draw erroneous conclusions.

- Non-Representative sample -- If you need to make decisions based on customer input, you need to know that your informants represent all of your customers or at least most of them. If your sample skews, your conclusions will be misleading. If you ask your most frequent customers what they want, their wish list might put less frequent customers off. The better someone understands something, for example, the more features and choices they want. Novice customers, typically, don't want lots of options. They want a clean, clear, path. For the most part. But that's the problem. What might you do for people who aren't customers yet but just prospects? How do you find those people? How many different kinds of those people might there be?

- Cherry picked

- A cherry-picked sample contains only people who fit your expectations. You can cherry-pick by accident: confirmation bias may highlight the evidence you want and keep you from seeing what you need. Ideally, your study would be anonymous and blind; that is, you have no idea who any given participant is and they don't know anything about you or the study.

- Self-selected

- If you ask people to fill out a questionnaire, then you inevitably only have data from people who were willing to fill out the questionnaire. Could that be a problem?

- Too small -- there's nothing inherently wrong with your group, there just aren't enough to ensure all possible positions are covered

- How many people do you need to learn about before you can reasonably assume you've learned everything anyone from the population can teach you?

- Corrupted (temporarily influenced, over eager to please, inclined to lie)

- A corrupted sample is simply one that has a misleading characteristic. If you interview people who have just seen an effective sales pitch and then use their responses to argue that clearly all people want to buy the product, you've influenced your sample and corrupted the results. Sometimes people will say what they think you want to hear or what they think people should say, because they want to be helpful or they don't want to be wrong or say unpopular things. If you are asking questions about behavior that a person might be ashamed of, some respondents will lie. This could be as simple as just making up an answer so as not to look stupid. If you ask someone whether the answer is A or B, they will likely guess if they don't know. That's why you should always offer "I don't know" or "neutral" so a person doesn't feel compelled to guess. If you have to ask someone about something dishonorable or embarrassing, you will either need to trick them into answering it or make them feel completely at ease, anonymous, safe. You will also need to account for the fact that some people will crow about their deviance as a way of dealing with their shame.
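On the "too small" problem, there is no single right answer to how many people you need, but surveys often lean on a standard rule of thumb for estimating a percentage (such as "what share of customers want feature X?"). The sketch below uses that textbook formula with a correction for small populations. The function name and defaults are my own, and the formula assumes a random sample. It says nothing about whether your sample is representative, and it does not apply to open-ended interviews.

```python
import math


def sample_size(population=None, margin=0.05, z=1.96, p=0.5):
    """Estimate how many survey responses (n) are needed.

    population: size of the target population N (None = treat as very large)
    margin:     acceptable margin of error, e.g. 0.05 for plus or minus 5 points
    z:          z-score for the confidence level (1.96 is about 95%)
    p:          expected proportion; 0.5 is the most cautious guess
    """
    # Responses needed if the population were effectively unlimited.
    n0 = (z ** 2) * p * (1 - p) / margin ** 2
    if population is None:
        return math.ceil(n0)
    # Finite population correction: small populations need fewer responses.
    return math.ceil(n0 / (1 + (n0 - 1) / population))


print(sample_size())                   # very large population -> 385
print(sample_size(population=10_000))  # N = 10,000 -> 370
print(sample_size(population=500))     # N = 500 -> 218
```

Notice how slowly n grows with N: going from 500 people to an unlimited population does not even double the number of responses needed. Getting the right people matters more than getting more people.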

Checklist for your business proposal

- What is your N -- the entire population of people directly and indirectly affected?

- What is your n -- the least number of people necessary to get a representative sample?

- How best to gather evidence from them?

- observation

- interviews

- questionnaires

- already available data

- What's missing from your data? (What about the planes that didn't come back?)

- How does that understanding of the people involved help you tailor your solution and your message about the solution?

Our Authority Problem

We Americans, by and large, don't like to be told what to think. Our country is founded on individuality and the idea that everyone is entitled to his or her opinion, no matter how demonstrably false that opinion is. We distrust authority in general, and most of us can think of half a dozen vivid examples of breaches of public trust that seem to warrant our wholesale rejection of authority. Our logic textbooks tell us that arguments from authority are fallacious. Any decent logic textbook, however, also tells us that just because an argument is invalid doesn't mean the conclusion is false.

The problem I have with the wholesale rejection of authority is that we don't always know enough to make good decisions without listening to expert testimony. We have to be able to understand enough science to differentiate science that is currently valid, acceptable to other scientists, from junk science, while still understanding that scientific thought evolves and what is scientifically valid today may be disproven in the future. To say that a theory is just a theory is to fundamentally misunderstand what the word theory means. There is a big difference between a speculation and a theory.

"A Frightening Myth About Sex Offenders" is a disturbing piece of video journalism about how junk science gets written into legislation. The video is clearly (I think) designed to shock and outrage people (the devil term "pedophile" and the opening scene make its emotional designs clear to me) and as such makes for interesting discussions about rhetoric as well as grounds for considering how what we want to believe influences not only how we see the world but how we change the world to best fit our perceptions of it. The problem with arguments from authority is that they are only as good as the grounds which grant authority, and here we seem to have evidence that no less an authority than the Supreme Court can base its decisions on junk science, thus undermining its authoritativeness without lessening its authority. It's still the Supreme Court, no matter why it says what it says. (link)

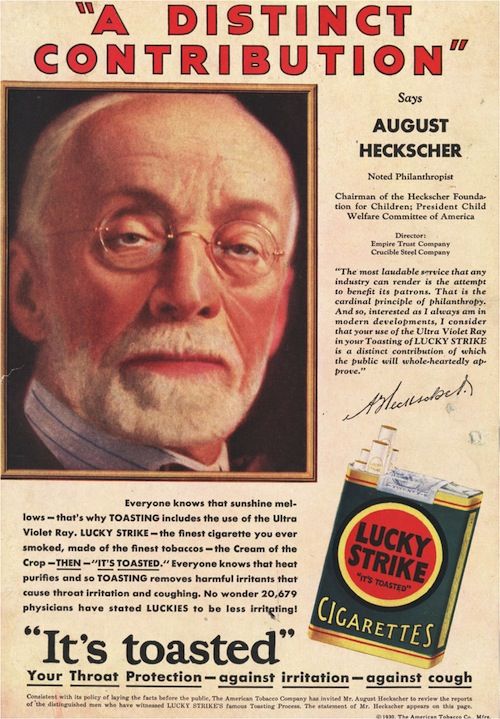

Before you reject an argument from authority solely because it is an argument from authority, you need to understand what the authority's opinions are based on. And if you can't understand, then you may need to confront the unpleasant fact that maybe you should submit to the authority anyway. And yet at the very same time, you can't let an authority abuse its power or make proclamations beyond its sphere of knowledge. The doctors in the 1950s who said Lucky Strike cigarettes were good for your throat because the tobacco was toasted should have had their licenses revoked, but of course by the time the world fully embraced that fact as a fact, they were dead.