Rough Draft

We could have a number of different kinds of conversations regarding AI text generation -- philosophical, technical, pedagogical, practical, bureaucratic. I'm open to any of these and I address each of them in passing in what follows.

I'm not exactly presenting an argument here. I am offering conversation kindling via links to articles and AI text generation services as well as a few very preliminary thoughts. Despite the current BUZZ, I don't think AI generators are just hype. Yet trying to predict what impact these tools will have is a bit like trying to predict where the Internet was going back when Prodigy and AOL were jamming your mailbox (or your parents' or grandparents') with CDs. Or what would happen to music publishing when Napster came out of nowhere. Or what impact, if any, Y2K would have. What of TikTok? To say nothing of Non-Fungible Tokens, Bitcoin, and Blockchain. Despite the current lack of clarity regarding AI text generation, wait-and-see will be a maladaptive strategy.

On the other hand, the Supreme Court is currently considering whether or not Meta and Alphabet and the like can be held responsible for driving traffic towards content that leads to criminal activity. The outcome could considerably change the legal landscape for AI generators and that could derail the train we seem to be on. As Michael Wooldridge points out in A Brief History of Artificial Intelligence: What It Is, Where We Are, and Where We Are Going (Flatiron Books, NY: 2021), AI has a cyclical history of boom and bust. I would love to know what he's thinking about it now. It's booming again now, even as the tech industry lays off tens of thousands of employees.

So you might want to wait and wonder, but I'm encouraging you to experiment with these AI generators, to see what they can and cannot do, so that you can make some experience-driven decisions, albeit provisional ones, about how you want or don't want to use them in your own writing and in your writing classes.

On the left of what you are reading now is a list of articles from all manner of non-academic publications that talk about AI text generation at various levels of hype, from panic to euphoria. Some are far more informative than others. You can decide what's worth reading. Below the articles there's a drop down list box that will take you to services offering AI support for writers. You should look them over and maybe try one or two out, assuming you don't have to pay to play. Your students have probably come across some of these. If you only have time for one, ChatGPT might be the one. Below the dropdown are links to a couple of other sources of information I've found useful, in particular The AI Exchange run by Rachel Woods. She is currently omnipresent on my TikTok For You Page, which I access with my personal phone only. She has a newsletter, a bit wonky, that I've found very interesting.

TL;DR

1) AI generators will be integrated into search engines, writing software, and anywhere else they can be

2) Search will change and with it the advertising model that made Google a verb

3) Creating content to drive traffic to your blog or whatever might go away

4) Businesses built on Search Engine Optimization will morph or die

5) Personality (delivery and style) will matter more than information (how to) or knowledge (what)

Even when coherent, AI text can sound tone-deaf, robotic (link)

6) A freelancer who doesn't learn how to use these tools effectively won't write fast enough to get paid

7) Short-form video will eventually dominate communications

8) These AI tools make great draft generators and can be very helpful style and arrangement editors

9) They will transform the job descriptions of technical, professional, and business writers

10) We need to rethink what writing is now and how to teach it

Slightly Facetious Time-line

1994 Netscape gives the world access to the Internet -- people stop going outside

1995 Amazon begins selling books -- book stores begin vanishing

1998 Google launches -- libraries become internet cafes and media centers

2007 Apple iPhone launches -- unmediated access to reality ceases

2010 (circa) robots start grading papers en masse for ETS and the like -- a few years later standardized tests are abandoned

2015 (March 7th) the New York Times publishes If an Algorithm Wrote This, How Would You Even Know? -- journalists are replaced by news aggregators.

2023 robots are writing, drawing, composing music, programming computers, milling parts, and assembling machines, oh, and they can dance

Should we despair?

I put the word work in quotation marks because I think we need to think about what intellectual labor is: What is reading? What is writing? What is thinking? And if they relate, how do they relate? There is a long standing tradition of rejecting reading and writing as the foundation of learning.

370 BCE (circa) Plato, in Phaedrus 274c-277a, writes that Socrates rejected writing on the grounds that it would make people ignorant, arrogant, and therefore unteachable;

1762 Jean-Jacques Rousseau's Emile or On Education asserts (in writing) "The child who reads ceases to think, he only reads. He is acquiring words not knowledge." (III, 130)

Their objections were largely ignored or discounted for various reasons, and literacy prevailed as a primary means of acquiring, even amassing, cultural capital. I think literacy will persist during the age of AI text generators, but it won't be our kind of literacy.

Large Language Model Transformers (LLMT)

Text generators use Large Language Model Transformers, software resources created by computational linguists and computer scientists that use oceans of text and millions of statistical calculations to predict what words to put in what order given a prompt. The more specific and precise the prompt, typically, the more useful and human-sounding (convincing) the output. You see predictive text generation in action every time you send an email or text message or enter a Google search phrase. LLMTs are predictive text generators, only rather than just completing a phrase or a sentence, they can compose entire paragraphs, however many and of whatever length in whatever style and on any subject you want.
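To make "predicting what word comes next" a little more concrete, here is a deliberately tiny sketch in Python. It is not how an LLMT works internally (those use neural networks trained on vastly more text); it only counts which word most often follows another in a made-up three-sentence corpus, which is roughly the idea behind the autocomplete on a phone. The corpus and the function names are my own inventions for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = following.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def complete(following, prompt, length=5):
    """Extend a prompt by repeatedly appending the likeliest next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break  # the model has never seen this word, so it stops
        words.append(nxt)
    return " ".join(words)


# A made-up toy corpus; real models train on hundreds of gigabytes.
corpus = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
)
model = train_bigrams(corpus)
print(predict_next(model, "sat"))
print(complete(model, "the dog", length=3))
```

Even this toy shows the two traits discussed here: the output can only recombine what was in the training text, and a word the model has never seen leaves it with nothing to say.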

What Can LLMTs Do?

AI generation is a continuously evolving field and so everything I've written here is subject to change.

To my mind, there's a lot of metaphysical noise surrounding the development of these tools. They are not now and never will be sentient beings -- capable of mourning in anticipation of their death. They cannot think in the sense of spontaneously inventing something unheard or unthought of. However, they can learn -- improve (and degenerate) with feedback -- and they can teach themselves in the sense that they can solve problems they were not specifically trained to solve. They cannot predict the future (though they might highlight an unnoticed pattern -- some people claim you can use them as stock-investment tools). And they cannot generate new ideas. They recombine existing ideas -- they have been called stochastic parrots. Typically their recombinations are acceptable to human readers, when they are, because the statistical methods used to generate the words they return when prompted are algorithmically tested for intelligibility in various ways before they are presented to the prompter. If an AI somehow did compose something new, it would either be nixed by an algorithm for making no sense or it would be articulate nonsense. (rhetoric?)

At the risk of sounding misanthropic, the vast majority of people I've met also recombine what they've read and heard and watched and then present that recombination as though it were an expression of spontaneous thought. I'm doing that right now.

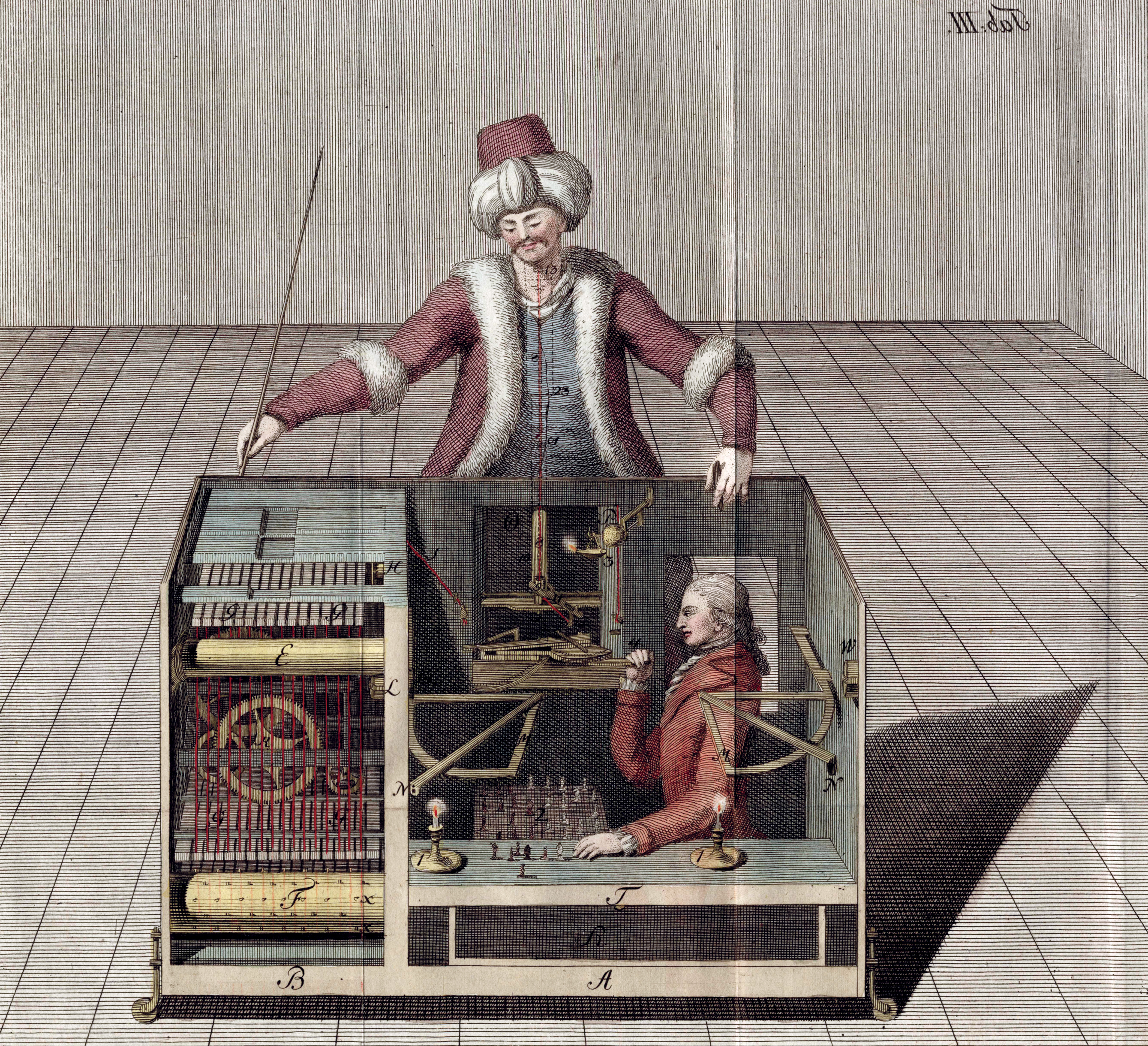

Anthropomorphism is less rhetorical figure and more failure of critical thinking in the era of deepfakes and auto-generated images and texts. If you aren't familiar with the expression Mechanical Turk, you should be, given that Amazon has a service called Amazon Mechanical Turk, which is a crowdsourcing service that employs armies of anonymous and I imagine not especially well-paid people to label and clean datasets that are used to train the LLMTs. These anonymous, likely exploited, people are the ghosts in the AI machine.

There's also a lot of media hype surrounding AI generators. I have over one hundred and fifty articles listed on the left here and most of those I collected in the last few months even though the first entry is from April 2016. This BUZZ was created largely by OpenAI's free release of its LLMT back in 2019. ("AI news-writing system deemed too dangerous to release," Cat Ellis, February 15, 2019)

OpenAI's ChatGPT is currently the fastest growing app in history. "Feb 1 (Reuters) - ChatGPT, the popular chatbot from OpenAI, is estimated to have reached 100 million monthly active users in January, just two months after launch, making it the fastest-growing consumer application in history, according to a UBS study on Wednesday." (link) There are several competitors and there are many third party startups trying to package and sell AI text generation to different niches -- students and content providers primarily, but lawyers and politicians and at least one priest as well as other high-profile people have said publicly that they have presented AI generated prose. If they aren't already, these tools will soon be providing medical diagnoses and psychiatric assessments. They will recommend stocks as well. How many of these tools will be around in five years is impossible to predict but the companies that succeed will be swallowed up by the most successful ones. Some things don't change. I can readily imagine that in a year or two we will be using AI integrations by default.

These tools can generate remarkably convincing text. When I went to Essayailab.com, for example, and gave it a one word prompt, "rhetoric", I got the following.

But they are also capable of generating errors, misleading but convincing statements, and just plain waitwhatnow? gibberish. Hover over the two links below to see examples of the latter.

DeepAI on rhetoric and demogogery (deliberate misspelling).

DeepAI on rhetoric and demagoguery.

Those are awful. Most of my experiences so far have been somewhere in the middle of extremes. I have a couple from the most prominent AI generator in the next section that lead me to think we will experience promise and confusion and disappointment and delight as we interact with these things -- and the results of these things -- now and into the future. These tools are learning, after all, and that's perhaps the most important thing to keep in mind.

ChatGPT

Chat Generative Pre-trained Transformer (ChatGPT) is a chatbot provided by OpenAI. It was launched in November of 2022 and has garnered an astonishing amount of media and academic attention. It might be what revives the software industry, which seems to be shrinking in various ways of late. It could also eliminate the job of software engineer in time. The question for us is what will it (its successors and competitors) do for and to writing as a practice and as a kind of labor.

Below are a couple of examples that I generated while playing around.

ChatGPT on Alt Ac

ChatGPT on ethos

ChatGPT: Editor

ChatGPT: A Sonnet on Pastry

ChatGPT: Arrangement 1

ChatGPT: Arrangement 2

ChatGPT: Transitions

Even when they generate coherent text, it can sound uncanny, like it has no definite point of view, robotic in a way, but because it is coherent the tone might not matter if it matches the subject matter or the attitudes of the audience. If I want to make a vegan lasagna I just want a list of ingredients, quantities, and cooking instructions. I don't need ideology or tourism or someone's personal thoughts about, well, anything at all. Other people don't want to make the lasagna; they want to listen and watch while someone explains the joys and intricacies of making vegan sauce from ingredients lovingly grown by hand. Those people will be irritated or bored by robotic text. The real trouble will start when people think they are interacting with a person when they are not.

A person would have to be hermeneutically naïve to think Charlotte Brontë is speaking to them directly when they are reading Jane Eyre, more so if they think somehow a dead person is communicating with them. The fact that we will be reading more and more never-embodied words might diminish naïve hermeneutics (or worsen its effects). These tools make spewing tons of mis- and disinformation infinitely easier, and some of that noise will be unintentionally auto generated.

You should generate your own examples, hypothesize and test, so you can see for yourself what you think this thing and things like it might be able to do now and in the future.

As I've played around I've half-consciously pondered what I was trying to accomplish as I played. I wasn't trying to stump it or figure out how it worked, though both thoughts crossed my mind. I was basically just repeatedly asking, what do you make of this... And then thinking a bit about how I might use what it seemed to me it could do. I am very early in this process. Below are a few prompts to get you started if you aren't sure where to start. Once you get started you will think of better ones.

The primary premise here is that used correctly, ChatGPT and the like can make drafting far more efficient, giving you more time for arrangement and style. But you need to spend more time fact checking than you might have with just a Google search process underwriting your invention methods.

Some Prompts to Start Playing With

Writing Assignments Designed with AI in Mind

Filthy Feedback Loops

There's a cottage industry springing up of people who delight in and gain social capital from bending these tools to work in ways their creators didn't intend despite the creators' best efforts to prevent abuse.

In 2016, Microsoft created a chatbot they called Tay, Thinking About You. They gave it a Twitter identity -- "The AI with zero chill." They gave it a Twitter handle, @TayandYou, and then they gave it to Twitter. People would respond to Tay's Tweets and Tay would use that information to Tweet anew. Humans of Twitter were functioning like a discriminator neural network, only they weren't so much discriminating as antagonizing and provoking. Sixteen hours later Microsoft had to shut Tay down because it was spewing racist, sexist vitriol. The Humans of Twitter trained it to be a Troll after their own image.

(One self-learning technique uses what is called a generative adversarial network. This technique pits a generative neural network against a discriminative neural network. One machine makes something and the other critiques it based on a model given by the programmer. Basically the human shows the discriminator what the intended output should be and the generator is given random data to start with. It makes something. The discriminator tests it against the model, then both refine their efforts and it goes again, ideally until the generator produces an example the discriminator can't find fault with (wiki). Kind of like graduate school.)
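For readers who want to see the generator-versus-discriminator loop in motion, here is a toy sketch in Python. Everything in it is an assumption made for illustration: the "real" examples are just numbers clustered around 4, the generator is a one-line formula that turns random noise into a number, and the discriminator is a single logistic unit. Real generative adversarial networks use deep neural networks on both sides, and nothing here reflects how Tay itself was built.

```python
import math
import random


def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_toy_gan(steps=500, batch=32, lr=0.02,
                  real_mean=4.0, real_std=0.5, seed=0):
    """Alternate discriminator and generator updates on 1-D data."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: x = a * z + b, with z drawn from random noise
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c), "is x real?"
    for _ in range(steps):
        real = [rng.gauss(real_mean, real_std) for _ in range(batch)]
        noise = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [a * z + b for z in noise]

        # Discriminator step: raise log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += 1.0 - d
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: raise log D(fake), i.e. try to fool the critic.
        grad_a = grad_b = 0.0
        for z in noise:
            d = sigmoid(w * (a * z + b) + c)
            grad_a += (1.0 - d) * w * z
            grad_b += (1.0 - d) * w
        a += lr * grad_a / batch
        b += lr * grad_b / batch
    return {"a": a, "b": b, "w": w, "c": c}


params = train_toy_gan()
print(params)
```

The thing to watch is `b`, the generator's offset: the critic's feedback is the only signal pushing it away from 0 and toward the real data. Toy setups like this often wobble around the target rather than settle on it, which is its own small lesson about how fragile these feedback loops are.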

A similar thing happened to one of Meta's AIs in November 2022. The Galactica language model was written to "store, combine, and reason about scientific knowledge" (Edwards). According to Edwards, Galactica included "48 million papers, texts and lecture notes, scientific websites, and encyclopedias." The goal was to facilitate and accelerate the composition of literature reviews, Wiki articles, and the like. When Meta offered it to the world to beta test, some people found it promising but others found it problematic and some set out to vividly demonstrate its problems by feeding it prompts that led it to articulate nonsense, in some cases offensive nonsense, as though it were fact. Yann LeCun announced the off-lining of Galactica with a Tweet, "Galactica demo is off line for now. It's no longer possible to have some fun by casually misusing it. Happy?" (link), suggesting malicious human interaction rather than AI defects led to the problems Galactica exhibited.

These two examples suggest that if AIs are going to learn from their own creations and people who don't have a vested interest in those creations are allowed to meddle with them, then all of the problems humans are prone to will be echoed in the AI's creations. If an innocent person encountered something written by such an infected AI, they might take nonsense as fact and could then conceivably exacerbate the problem by feeding it more AI-inspired garbage. See also "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?" (Emily M. Bender, et al. link). The moderators at Stack Overflow, a coders' Q and A site heavily used by developers and novices, banned ChatGPT-generated answers for giving bad coding advice.

Garbage in, Garbage Out

In these cases the rubbish input came from malicious users, but the same effect was created when the Urban Dictionary taught IBM's Watson to curse like an internet sailor (wiki). Given that an AI writer could compose thousands of messages a second and instantly publish them on Twitter and Facebook and Instagram, to any digital space, you can readily see how disinformation and misinformation and meddling can be automated beyond most people's understanding. Good information could be spread that way too, but bad information travels faster and what well-meaning soul would think to weaponize good news?

The investment required to create an AI writer is substantial, upwards of $10 million, according to Vinay Ilyengar. While this initial cost limits participation at the development level, OpenAI and others (BLOOM and Eleuther, for example) have made their technology available to developers for licensing fees or for a stake in what is developed. Thus the power to generate entire paragraphs has overcome the barriers to entry that kept it in the hands of linguists, programmers, and people with direct access to venture capital.

One of the other requirements for computational composition is readily available digital corpora. A corpus is simply a collection of texts, many millions of lines in some cases (Google Books, Wiki, Project Gutenberg, to name a few), that is used to "train" the AI, a dataset from which it can draw inferences about how to interpret and compose, based on word frequencies, word clusters, regression analyses, standard deviations, and a lot of other statistical processes far beyond my understanding. The corpora available to AI writers are in theory limitless. Any text formatted for web delivery (HTML, XML) can be incorporated. OpenAI's GPT-3, for example, currently uses 570 gigabytes of text. (link)

The training materials AI writers rely on are limitless in theory, but often the same set is used over and over again because plugging in existing data sets is much more efficient than finding, acquiring, formatting, and giving new material to the machine. As Kate Crawford explains in Atlas of AI, the biases and assumptions implicit in the training materials are inevitably a part of what the machine generates. While new material is possible in the sense of recombination of materials in new ways, the legacies of the original remain only partially buried in the shifting sand of newly generated text. When Angela Fan, a data scientist for Meta, set out to auto-generate biographies of women scientists to contribute to Wikipedia using Wikipedia as her training dataset, she found that gender biases implicit in Wikipedia had to be overcome before the project could succeed.

Kate Crawford also points out that the environmental and social cost of providing the herculean computational power effective AI requires is arguably unconscionable. That is an important critique, although I only have space here to acknowledge it in passing. If at some future point AI becomes not merely unconscionable but unsustainable, then its achievements will be purely historical. Given the cyclical boom and bust history of AI, it's quite possible that the current boom will become an echo.

So the results of unattended algorithmic composition can range from absurd to traumatic, indicating that human oversight or adversarial machines designed to offer as-good-as human oversight will likely need to become a part of AI's generative practices. Even if we don't know exactly how these machines do what they do, we need to much better understand the ways they can be employed, the limitations inherent in their models, and how we might both use and learn from the facility they can provide. All of which means, I think, that we need to be paying close attention to what these machines produce. And that means we need to remain calm.

So, Are They Any Good?

That they can do what they do as well as they can suggests to me AI generators belong in the pantheon of greatest human achievements, but that doesn't mean they are without problems, and just because they are a grand achievement doesn't mean the consequences down range will be wonderful.

AI text generators are not infallible and if the prompt is carelessly crafted, you may get nonsense or worse. There are people putting AI Prompt Writer on their resumes as we speak and in some shops at least, those people may replace writers -- copy writers, technical writers, professional writers, etc. -- in the future. We will have content creators but not writers per se.

AI generators are only as good as the samples they train on and those samples typically include all of the frailties, moral and cognitive, that humans are prone to. They are also working from out of date materials because they currently don't have access to the internet. The only source of new information they have is information they generated out of old material. If that material isn't vetted by a person, the machine is engaging in some digital facsimile of wish fulfillment thinking -- learning from its daydreams and hallucinations, as it were. The output can instead be vetted by a machine learning technique called a generative adversarial network, which pits a generative neural network against a discriminative one, as described in the Tay example above. For now at least and perhaps forever, garbage in, garbage out.

In the recent past, AI text generators were prone to user corruption and could spontaneously generate hate speech and vitriol. OpenAI claims to have fixed this problem but there are hackers who have claimed to have circumvented the fix. As Mary Shelley explained to us years ago, the villagers are the monsters.

They are prone to unintentional (they are not minds; they cannot intend) rhetorical chicanery. Rachel Woods has pointed out that if you give ChatGPT a URL it will appear to summarize the content found at that URL, only it can't because it doesn't have access to the internet. What it is doing is using the key words present in the URL to spin up a summary of what statistics suggest those words might be made to say. If the key words aren't helpful, it will give you nothing. But if they are -- like most of the URLs you will find at professional publishing houses and newspapers, etc. -- it will give you a coherent riff on the topic. Currently, at least, it is not telling you that's what it is doing. Using these tools without informed, conscious, decision making is like sleeping behind the wheel of a Tesla on Autopilot.
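You can get a feel for how much a URL gives away with a few lines of Python. This sketch is only an illustration of the point attributed to Woods above, not a description of what ChatGPT does internally; the two example.com addresses are made up, and the little stop list is my own guess at which URL fragments carry no meaning.

```python
import re
from urllib.parse import urlparse

# Fragments that show up in URLs but say nothing about the topic (my own list).
STOPWORDS = {"a", "an", "and", "the", "of", "to", "in", "on", "for",
             "is", "html", "htm", "php", "index"}


def url_keywords(url):
    """Return the meaningful-looking words found in a URL's path."""
    path = urlparse(url).path
    tokens = re.split(r"[^A-Za-z]+", path)  # split on digits, slashes, hyphens
    return [t.lower() for t in tokens
            if len(t) > 2 and t.lower() not in STOPWORDS]


# Hypothetical newspaper-style URL: the slug is practically a headline.
readable = "https://www.example.com/2023/02/01/technology/chatgpt-fastest-growing-app.html"
# Hypothetical opaque URL: nothing for a text predictor to riff on.
opaque = "https://www.example.com/p?id=48213"

print(url_keywords(readable))
print(url_keywords(opaque))
```

The first address hands over a topic, a subject, and a claim before anyone has read a word of the article. The second hands over nothing, which matches the observation that unhelpful key words produce no summary.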

Abbreviated List of Ethical Concerns

- Academic (dis)honesty

- These tools are not plagiarizing, although they can be prompted to compile text in ways that might be mistaken for a famous writer's words if trained to do so

- The texts they are trained on were taken without the writers' consent (or knowledge)

- A student passing off AI generated text as their own is clearly being dishonest and violating a code of conduct, but they are not quite plagiarizing. Academic dishonesty doesn't readily apply outside of school. People are using these tools to post blog and Instagram entries many times a day.

- There are now tools to identify whether or not something was AI generated, but they aren't perfect

- You can ask in your prompt for the AI to cite relevant sources, so demanding sources alone won't circumvent AI inventions

- You can refine writing prompts and prepare for auto generated responses by running your prospective prompts through an AI generator before assigning them to students

- You could return to hand-writing in class and recitation

- Alternatively, you could teach students how to use AI as a drafting strategy instead of an avoidance strategy

- Banning things that frighten us is a maladaptive strategy

- These tools also summarize text, greatly reducing the length of time it would take to read the work in its original (significantly altering the hermeneutic experience)

- Having the machine summarize your own writing is an interesting revisionary strategy

- Exploitative training practices -- relying on underpaid workers from impoverished nations to identify and tag chunks of text to provide training data for the models

- They consume vast resources from electricity to precious metals (Crawford, Kate. Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence. Yale UP, 2021.)

- They reinforce social and cognitive biases

- They can create persuasive nonsense and mislead the innocent (agentless rhetoric)

- They feed off of self-reinforcing data

- Further dehumanization -- if we are talking to and reading from bots regularly, will we increasingly interact with people as though they are robots and bots as though they are people? Will we cease to distinguish? Or will we get fixated on voice and tone and style in an effort to distinguish? Or ...

Cognitive Consequences?

- What does asking, "Did a computer or a person write this?" do to your relationship with (some kinds of) writing?

- What effect does off-loading drafting and revising and editing have on thinking and on one's composing process?

- What does reading summaries and gists instead of the originals do, other than speed up the process of learning from reading and writing (unless one isn't learning anymore)?

- I can't help thinking that a combination of text summarization and automated report writing could reduce the time to degree by 50% or more.

We need to rethink what writers do and what writing is as we rethink writing instruction

We tend to use the word "writer" without much specification. If you ask someone to name a writer, they will likely name a famous author, a literary writer. Maybe a poet if you ask the right person. But nearly everyone writes on the job and most people who have some kind of knowledge-based employment spend hours writing daily.

A lot of college graduates, even non-English majors, find employment that involves a lot of writing: reports, summaries, social media posts, website content, instructions, and so on. The current AI generators can produce a workable draft of almost any of these kinds of writing in seconds. If you get good at crafting prompts, you can drastically reduce your time spent writing. Presumably you will need to edit and fact check, but you can offload drafting, revising, and editing (and maybe also

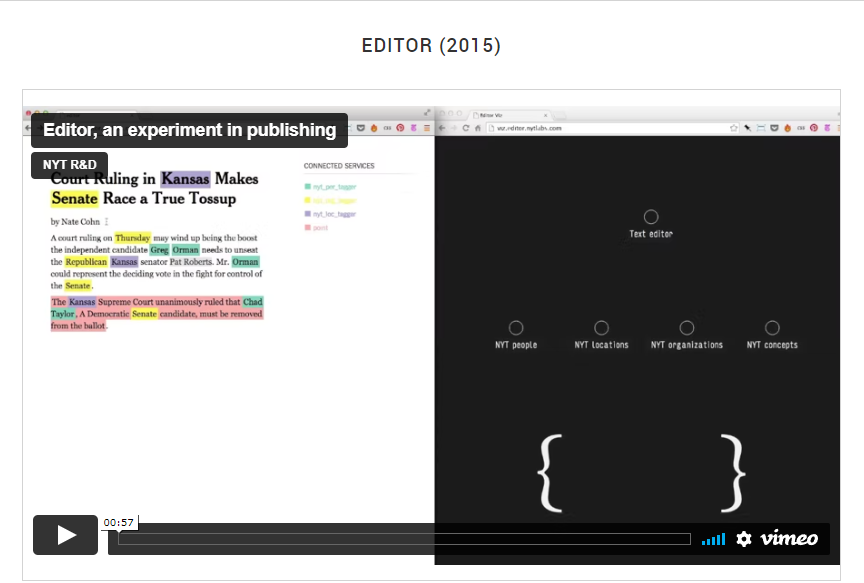

From NYT Labs: "This prototype is comprised of a simple text editor ... supported by a set of networked micro services (visualized on the right). The micro services shown here are recurrent neural networks (using https://code.google.com/p/word2vec/) that are trained to apply New York Times tags to free text, but you can imagine a host of other services that could do things like try to attribute quotes or that know about specific domains like food or sports. As the journalist is writing in the text editor, every word, phrase and sentence is emitted on to the network so that any micro service can process that text and send relevant metadata back to the editor interface. Annotated phrases are highlighted in the text as it is written. When journalists finish writing, they can simply review the suggested annotations with as little effort as is required to perform a spell check, correcting, verifying or removing tags where needed. Editor also has a contextual menu that allows the journalist to make annotations that only a person would be able to judge, like identifying a pull quote, a fact, a key point, etc." (link)

This also means that some of the people who would be paid to write various kinds of text will no longer be needed in that capacity. They will have to learn new skills and drastically alter their existing skills. If I had to bet, I would bet on short-form video becoming the dominant medium in the immediate future. You need some compositional skills, even a script in some cases, but you also need to know how to film and edit film, you need to be able to convey a feeling and invoke a thought, tell a story that compels attention by creating a desire and then fulfilling or seeming to fulfil it.

Ever since Google, just having declarative knowledge of all but the most arcane disciplines has been devalued. Ever since YouTube, how-to knowledge has been devalued in the sense that you can do for yourself today many things you would have needed an expert for in the past. This fact has also contributed to the devaluing of expertise in general. I think AI text generators may reduce the value of technical and professional writing as a marketable skill, at least as we have taught those disciplines up until now. AI generators may do the same for computer programming. If this devaluing is going to take place, it won't happen overnight. But economic pressure will lead a great many word-based enterprises to reduce their employee rosters in the name of efficiency, I have no doubt. I'm not saying all such jobs will go away, just that the job descriptions will change radically and there will be greater competition for these new jobs because there won't be as many of them. They will also require a skill set that isn't "just" the ability to write well.

Given that the standard research essay can be generated in seconds by machine, we need to re-think this kind of writing assignment -- many of us have questioned that kind of assignment for years. We might want to shift from exposition and scholarly reporting to personal experience and reflection -- what some people call expressivist writing. Presumably asking ChatGPT, "What do I think about the free market economy?" won't yield anything, even though asking it, "Contrast a free market economy with a state managed one and provide examples" would yield material from which to write an informed draft. (If one had a substantial body of work marked up as a corpus that an AI system could be trained to read, then such questions might yield interesting answers, but that condition won't be met in nearly all cases, at least for now.)

Learning to write using AI assistants

I think there is an analogy between learning to write using AI drafting/revising/editing tools and learning to write computer code. We've had what software writers call syntax highlighting ever since spell check and these editorial assistances have gotten ever more sophisticated (some experienced writers might say obtrusive or dictatorial but you can tune them and you can turn them off). Often new coders make progress on a project and then hit a snag, a bit of code that throws an error they can't correct. When this happens, they try to express the problem precisely and if they can, Googling that problem will take them to a site that has the answer. In my experience, that site is often Stack Overflow, a community of very patient coders who also write well. Interestingly enough, they recently said they would not publish AI generated answers because they consider them too unreliable. I've also seen a post saying their traffic is down 11% since ChatGPT went live. What this might mean is that some people are asking ChatGPT to write code for them and since it is sometimes capable of writing good code, those people don't need Stack Overflow any more, for that task at least. This speculation leads me to think that invention in text may take on a similar process. You have an AI write a draft and then you debug the draft, looking for expert advice from people when stuck. Alternatively, you could hand off your word-salad draft to ChatGPT and prompt it to optimally organize it and then revise it for clarity and brevity.

When I was learning to code I confided in a code-writing friend that I couldn't so much write code as stitch others' code into solutions to my own problems. He just shrugged and said, "That's all anyone does."

A Statement on Artificial Intelligence Writing Tools in Writing Across the Curriculum Settings.

MLA-CCCC Joint Task Force on Writing and AI