How to use AI for generating ideas

Writer's block is real. Blank screens are intimidating. Sometimes just writing down a few ideas quickly -- a list of questions, key words, potential issues, to say nothing of an outline -- requires overcoming inertia. Googling to generate ideas has been very useful and will continue to be, but now we also have tools that can summarize, organize, and present ideas in a way that saves us some of the initial time spent reading and sorting raw information. AI can generate anything from a list of key words to an outline to an entire draft formatted for a specific genre, audience, and context. While people who write for a living in many business contexts might be able to work from an AI-generated draft, we teachers need to decide, perhaps on a class-by-class basis, how complete a draft we want generated for our students. One might begin by having students critique an AI draft and then develop a new draft part by part, generating and organizing ideas and then writing a draft from that material. I think the learning outcome of knowing how to craft prompts might be nearly as important as the pre-AI learning outcomes for a writing assignment.

These suggestions assume the writer knows the subject they want to write about, but little else at this point.

These prompts amount to a semi-structured warm-up to actual drafting.

- learn about your intended audience or discover what kind of people might be interested in your intended topic

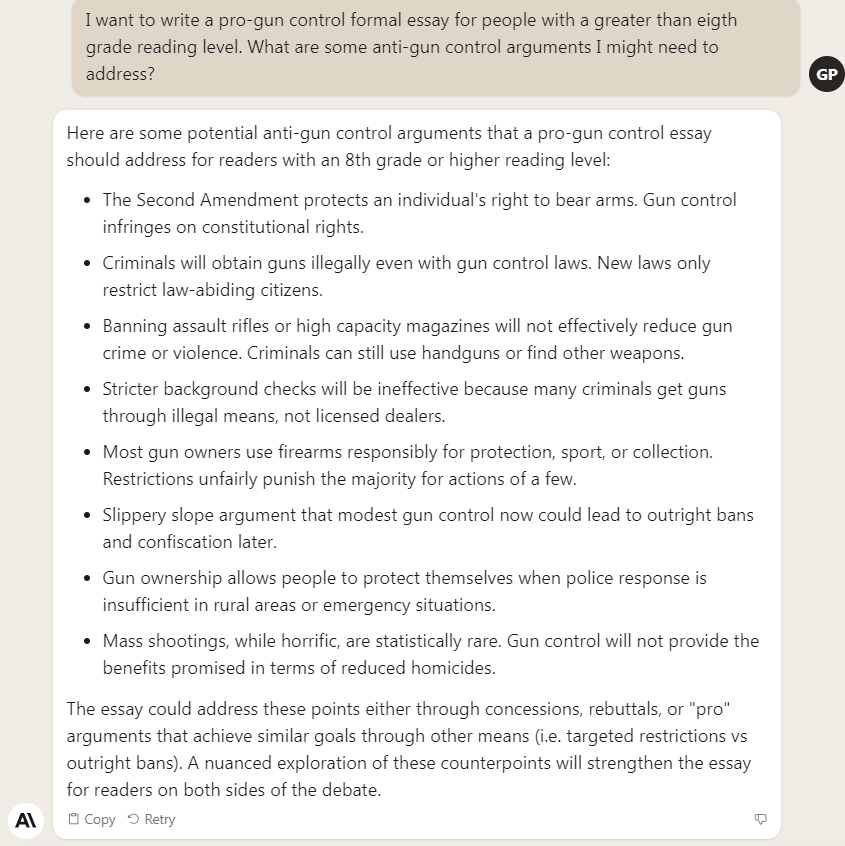

- identify the concerns, questions, doubts, needs, and core values people are likely to have regarding your intended topic -- I want to write a pro-gun-control formal essay for people with a greater than eighth-grade reading level. What are some anti-gun-control arguments I might need to address?

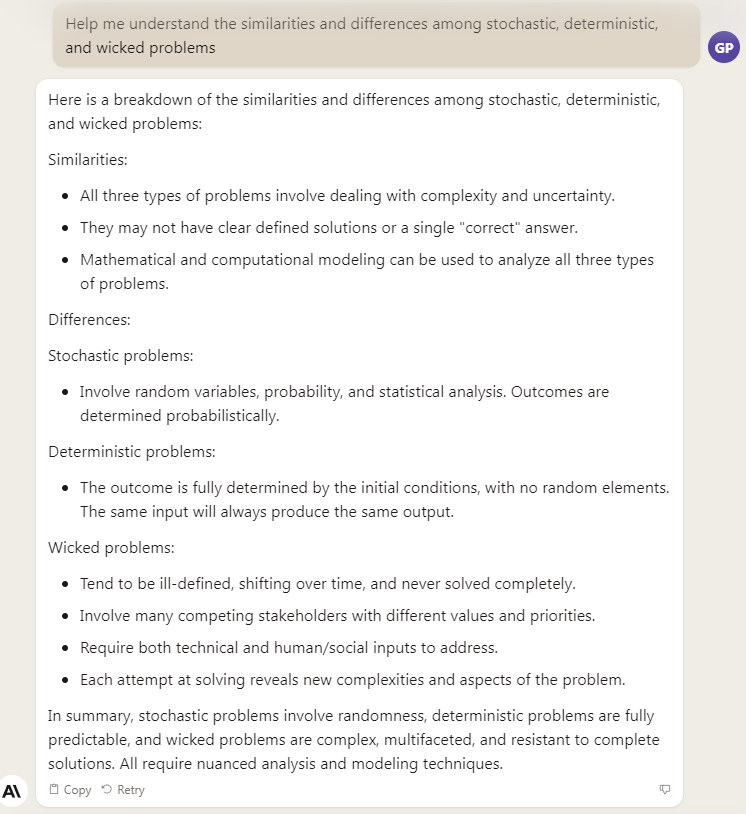

- identify key words and concepts relevant to a subject new to you

- ask it to explain those concepts so you are sure you understand them -- give me a brief history of the Second Amendment to the American Constitution. What are some of the relevant Supreme Court decisions regarding it?

- get acronyms and other memory devices to help you learn them -- I need to learn more about both sides of the gun control issue. Can you lay out a study guide for me?

- perhaps ask it to explain a given concept for a different audience (expert, student, five-year-old) -- how would you explain the Second Amendment to a European audience?

- ask for common misconceptions regarding a subject -- what are some problems with how gun injury, fatality and crime statistics are derived?

- differentiate among similar words to make sure you are using words precisely

- get analogies to help explore an idea from different angles

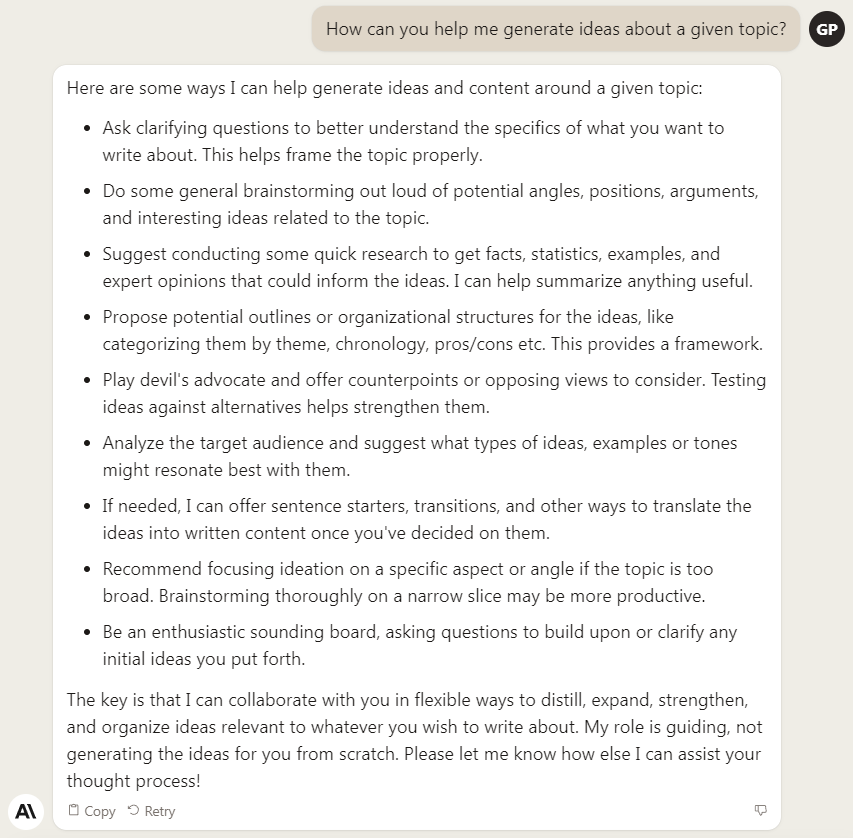

- ask it to offer opposing points of view and the arguments each side might make in a controversy you give it

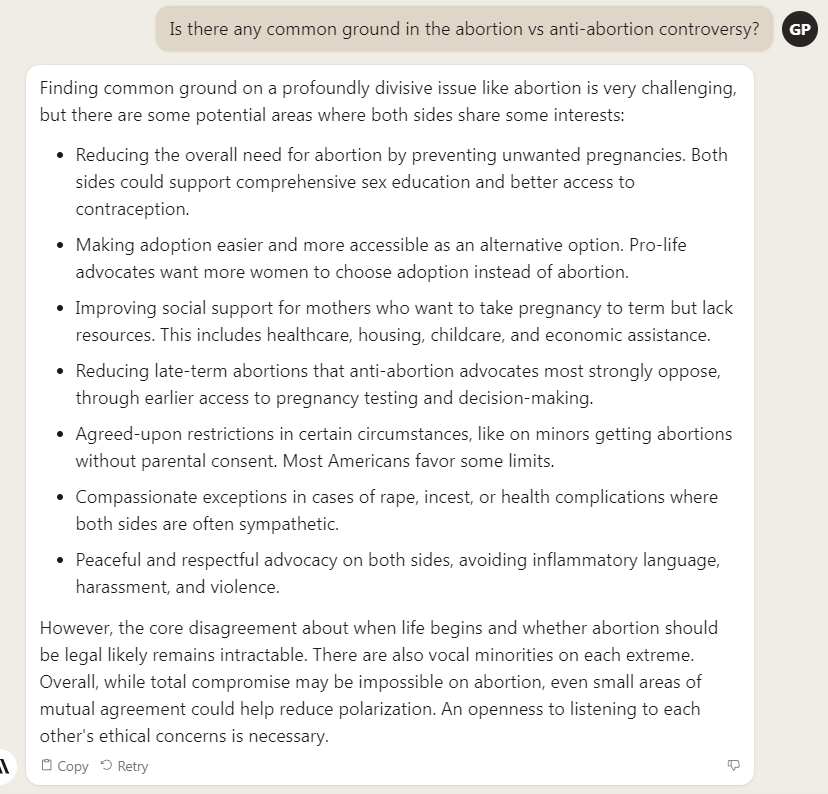

- ask it to find common ground within a controversy

- ask it to summarize an article you give it to read (Claude)

- ask it to identify biases in your own writing or in an article you give it to read (Claude). Some people have noticed what they call a "liberal bias" in the texts AIs generate, and some have suggested creating a right-wing alternative.

- ask it to identify implicit assumptions in your own writing or in an article you give it to read (Claude)

- ask it to identify potentially relevant data and sources of information (Claude, since ChatGPT's training ended in 2021)

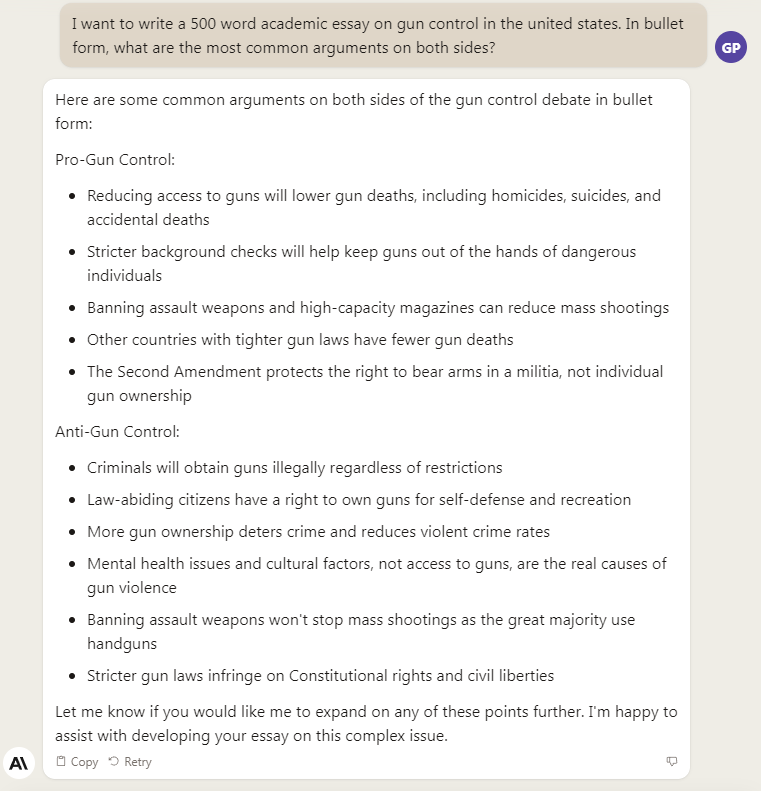

- get an outline of the main points that a given subject might need to cover given a specific audience

Sometimes getting started with a piece of writing is hampered by an unclear sense of the genre one needs to produce. In such cases, a prompt like "What topics are typically covered in X kind of speech for Y kind of audience?" might be helpful. So might "Please give me some examples of X kind of speech."

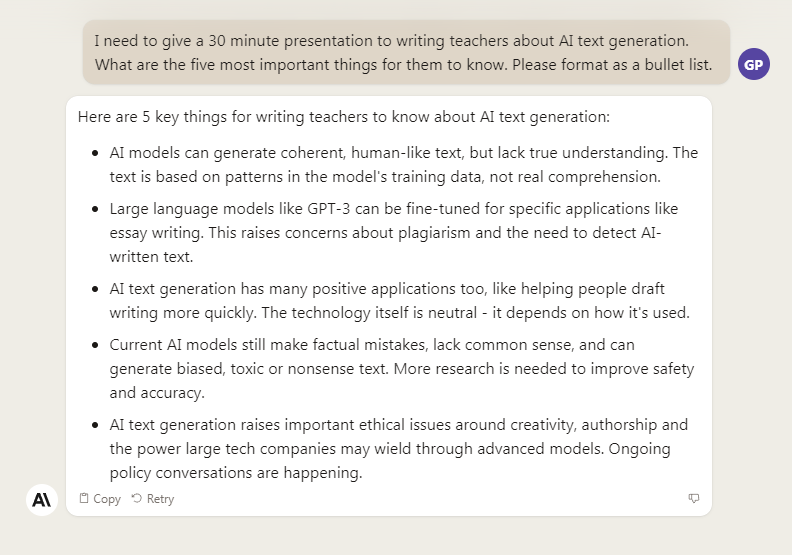

If you know your topic but have no idea where to start, ask an AI to help you generate ideas.

Remember: these AIs write even nonsense with clarity and conviction.

How to use AI as a co-pilot while drafting

In the not-so-distant past, writers used libraries to access reference texts: thesauri, dictionaries, rhyming dictionaries, encyclopedias, books of quotations, citation indexes, summaries, and so on. They used them to enhance their word choices, check facts, find supplemental material, and test their ideas against ideas others have had. When internet search arrived, we did the same things with digital resources at our fingertips, without having to leave our desktops: Wikipedia as well as the digital versions of the hidebound references we used pre-Google. Now we have AIs to do these same things and others, more efficiently and more conveniently. AI is more efficient because you don't need to write your own summaries or know which resource to consult. It is more convenient because it's all there in your browser. You do, of course, have to cross-check. But that's not new. Even the Encyclopaedia Britannica got things wrong sometimes. One fast way to cross-check is to ask the same question of each of the most commonly available AIs: Bard, ChatGPT, and Claude. If you get wildly different responses, you need to investigate using other resources.

Once you have a topic, a perspective, and a plan or outline, developing effective sentences often requires quick historical or biographical or anecdotal lookups, short branches of thought, some specific word or phrase that will help you keep writing. Here are a few suggestions along these lines.

- get definitions

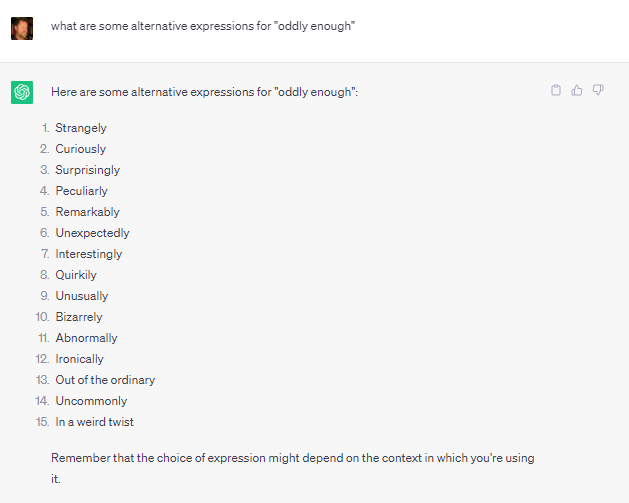

- get synonyms

- ask it to use each one in a sentence and to explain the subtle differences among them

- get antonyms

- get transitions

- get it to differentiate similar words or words often misused

- get alternatives to a cliché

- get it to recast an idea using a specific rhetorical figure

- give it an example or a description and ask it to name that rhetorical figure

- learn about and get examples of rhetorical figures

- ditto tropes and commonplaces

- look up potentially relevant historical figures or events

- ask it to critique an argument

- ask it for references and/or data, or potential sources of data, to support an argument you think you might want to make

- ask it to devise a writing schedule for you: how many sessions a day and how many minutes per session, given how many pages you need to write and what your deadline is

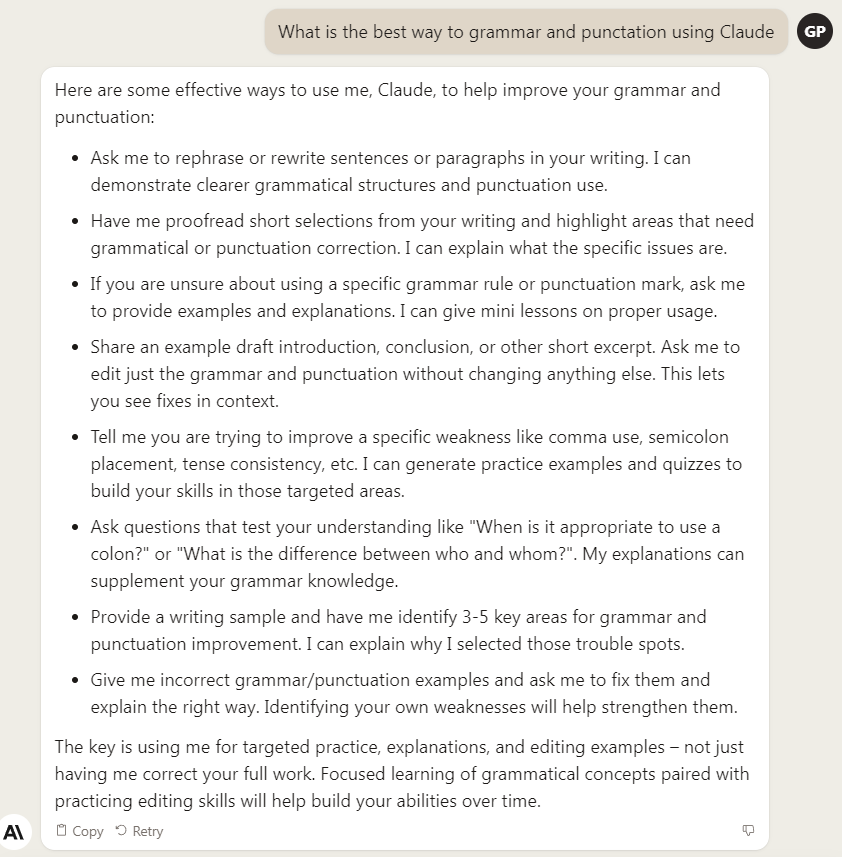

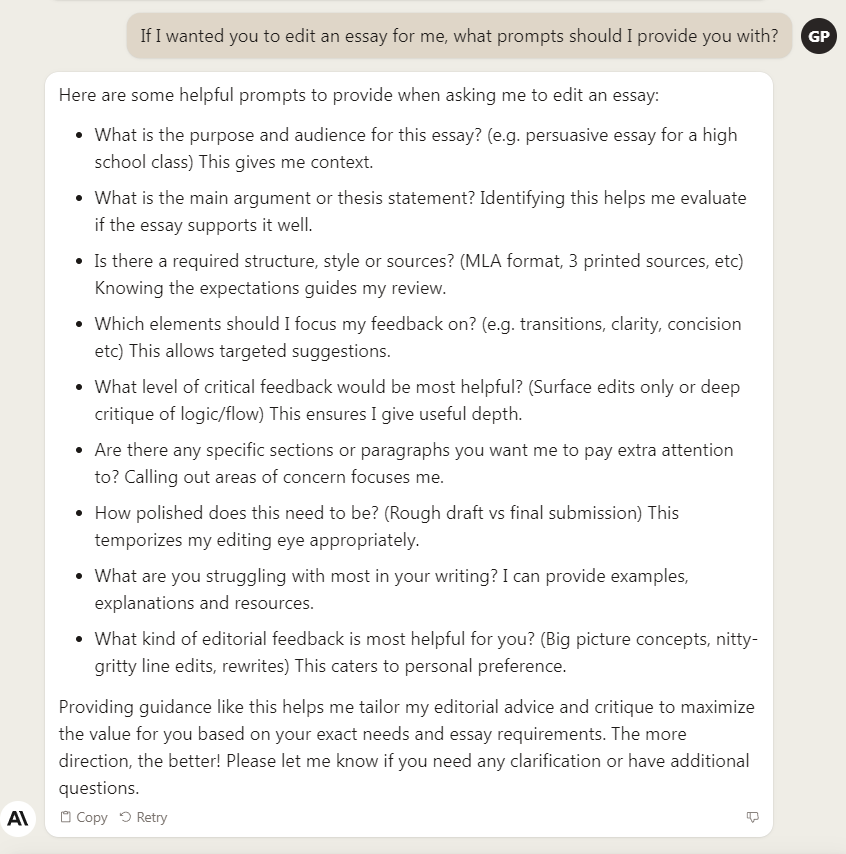

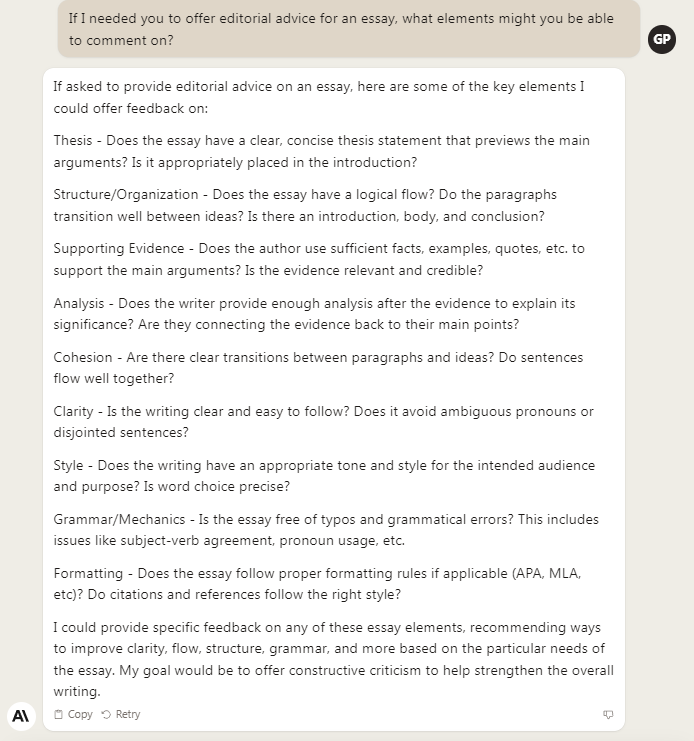

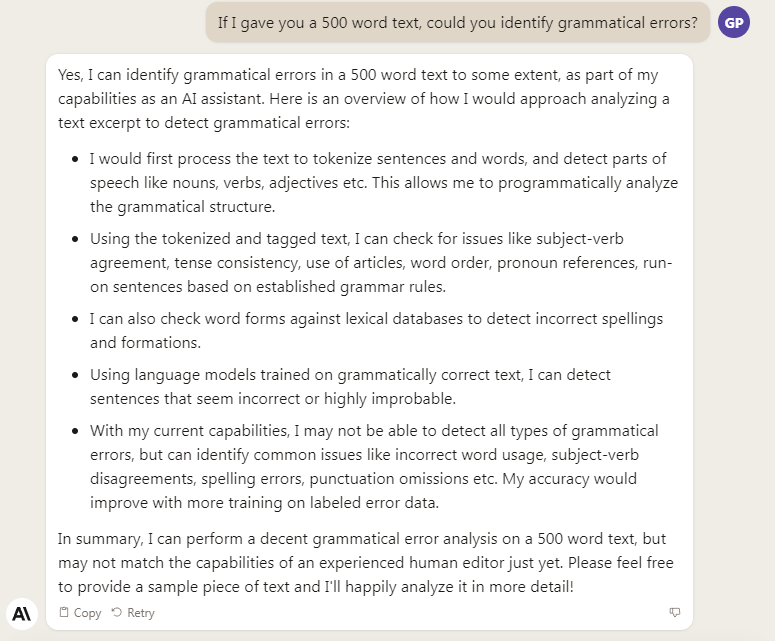
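The arithmetic behind such a schedule is simple enough to check yourself, which is one quick way to sanity-check what an AI proposes. Here is a minimal Python sketch. It assumes (hypothetically) a fixed number of minutes per drafted page and a fixed number of sessions per day. The function name `writing_schedule` and its default values are illustrative choices, not anything an AI tool actually uses.

```python
import math
from datetime import date


def writing_schedule(pages, start, deadline, minutes_per_page=60, sessions_per_day=2):
    """Spread drafting time evenly over the days before a deadline.

    Hypothetical helper: minutes_per_page and sessions_per_day are
    assumptions you would adjust to your own pace.
    """
    # Days available, counting the start date but not the deadline day itself.
    days = (deadline - start).days
    if days <= 0:
        raise ValueError("deadline must fall after the start date")

    total_minutes = pages * minutes_per_page
    # Round up so the daily plan never falls short of the total.
    minutes_per_day = math.ceil(total_minutes / days)
    minutes_per_session = math.ceil(minutes_per_day / sessions_per_day)

    return {
        "days": days,
        "pages_per_day": round(pages / days, 2),
        "sessions_per_day": sessions_per_day,
        "minutes_per_session": minutes_per_session,
    }


if __name__ == "__main__":
    plan = writing_schedule(pages=10, start=date(2024, 3, 1), deadline=date(2024, 3, 11))
    print(plan)
```

Take 10 pages due in 10 days, at an assumed 60 minutes a page. That works out to two 30-minute sessions a day. If an AI's schedule differs wildly from a back-of-the-envelope calculation like this, ask it to show its reasoning.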

How to use AI for revising and editing

Once you have a draft that you think is fairly complete though not yet finished, you can use AI to help you tighten sentences, organize a meandering paragraph, and catch grammar and punctuation errors.

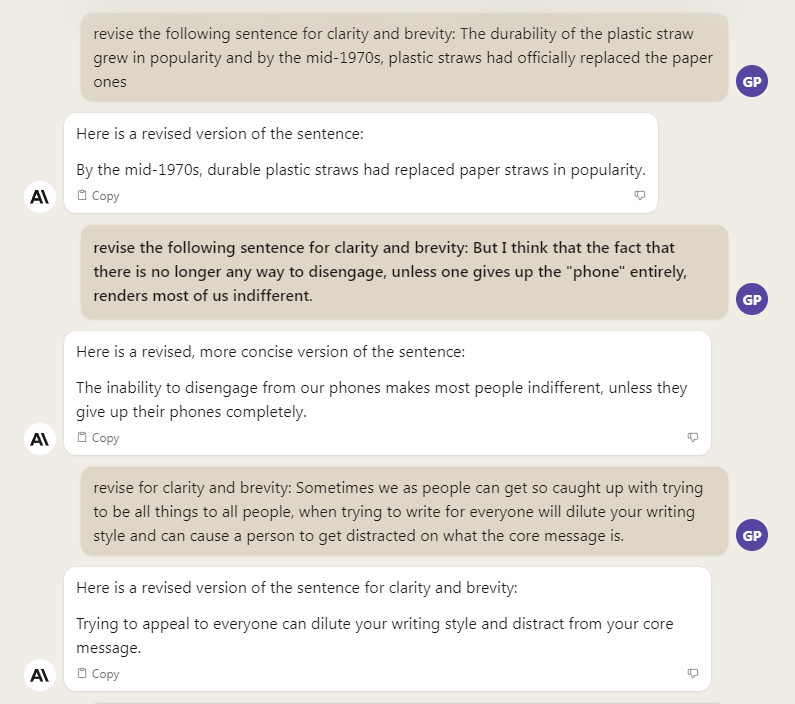

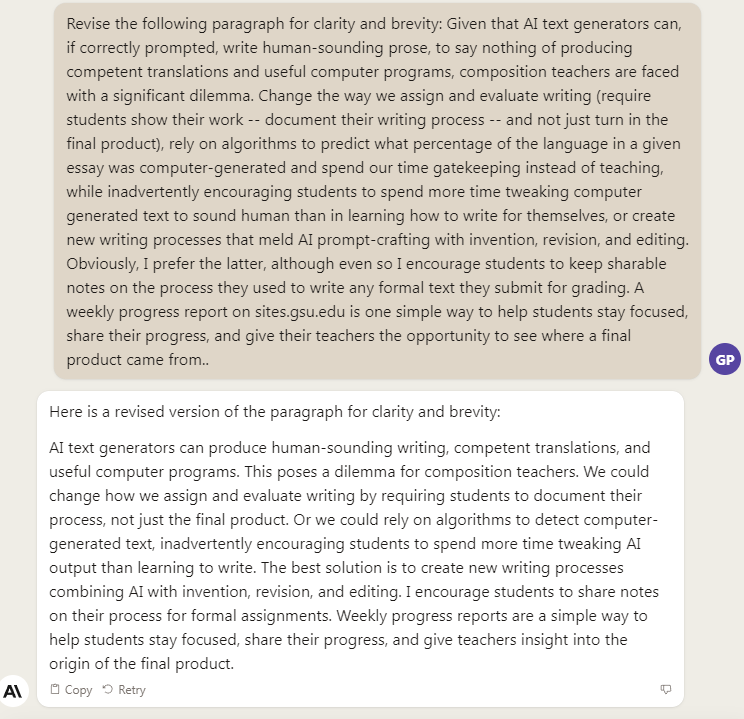

- revise this sentence for clarity and brevity

- revise this paragraph for clarity and brevity

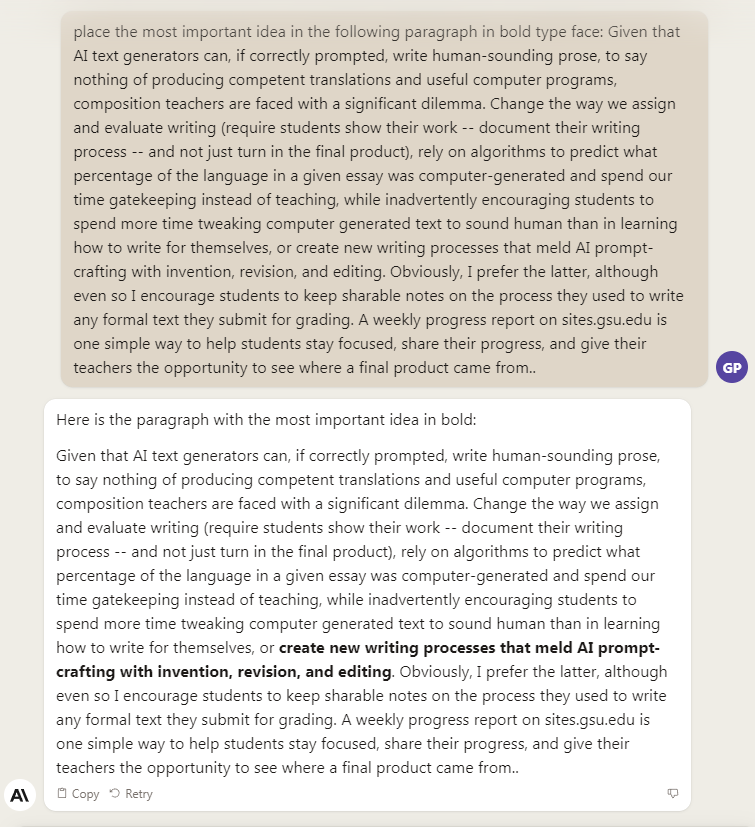

- what is the main or controlling idea of the following paragraph -- unbury the lead

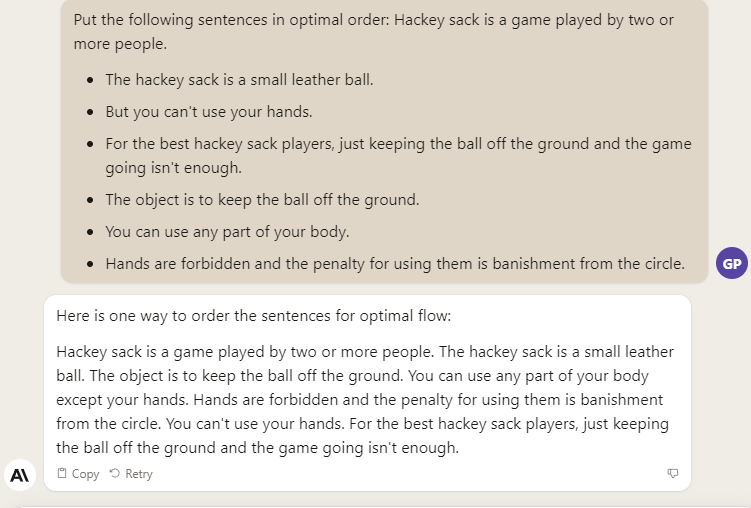

- put the sentences in the following paragraph in optimal order

- highlight the topic sentence of each paragraph

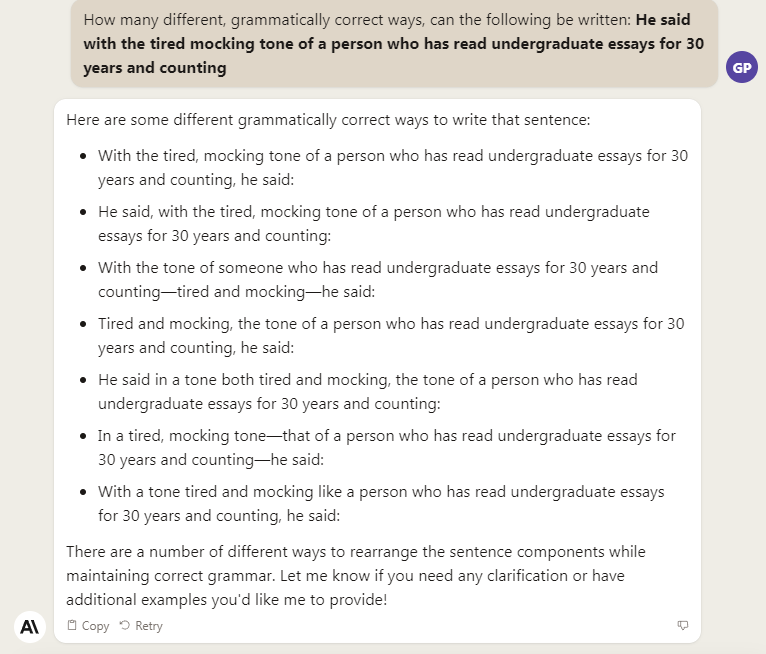

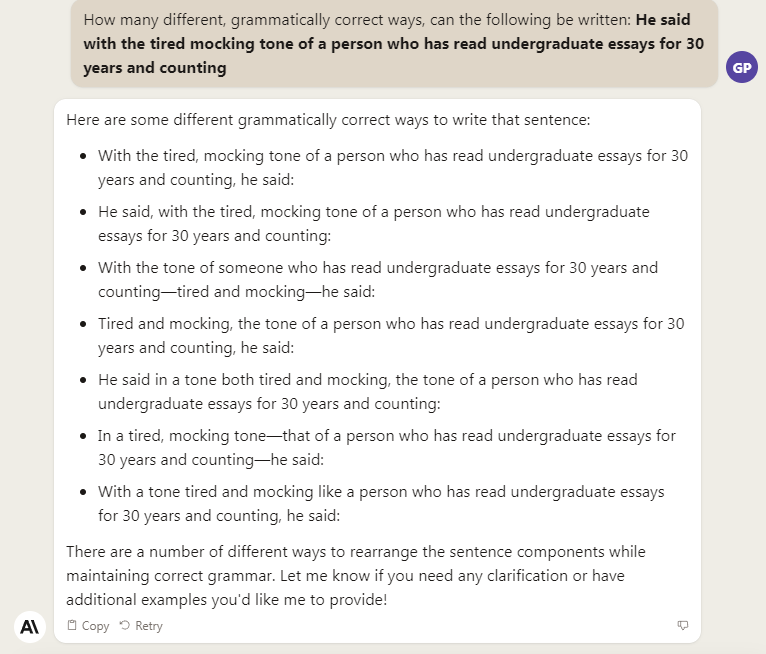

- ask it how many different ways the same sentence might be phrased

- ask it to check grammar and punctuation

- ask it to format a rough bibliography for a specific style guide

- ask it for the best editor prompts

example

As the example for the last item above (asking for the best editor prompts) indicates, you might consider telling it what kind of editor you need it to be -- audience, genre, length, level of development (does it notice any missing arguments or problematic assumptions?) -- as well as the specific faults you want it to identify: grammar, punctuation, whatever. Then tweak your request based on the feedback the AI returns. You might ask it for a bulleted list of the issues it has identified so you can go back over your text to see what it missed. Experimentation is key here. Try different approaches, the same approach on differing kinds of writing, a one-shot versus an iterative approach, and whatever else you can think of to learn how to make the best use of these tools.

How to use AI as a writing tutor

The idea here is to find a prompt or a series of prompts that would help a writer improve an already fully drafted piece without "improving" it for them: AI as tutor rather than ghostwriter. Alternatively, you could, as a composition instructor, develop a prompt plan and use it to semi-automate giving your students feedback. They turn in a draft. You run it through your protocol. The AI marks up their draft. You check it over and, if all is right, send the marked-up version back to the student. You might be able to go through several iterations before grading, resulting in better grades for them and less tedious work of debatable utility for you. Editing student writing has never been shown to help students write the next piece better. If the machine could show a budding writer, one or two items at a time, how to phrase something better, what a given transition signals, or how to say the same thing in fewer words, then that student might start to need less feedback over time. But not all students are budding writers, and many will accept AI advice without reflection and learn nothing.

- Acting as a writing instructor, provide feedback on the following essay. Put in bold any sentences that could be written more efficiently, words that aren't right, ideas that need elaboration, and anything else I should revise. Please indicate any grammatical or punctuation errors using italics.

You might also consider taking examples from drafts your students have given you -- a week, or at least a few days, before the due date -- and in class asking one or more of the AIs to identify the problem or problems you noticed in each example. That way you would model for your students the AI prompting process you want them to follow before turning anything in to you.

Claude as editor

Claude as a grammar checker

ChatGPT as a grammar checker

How to use AI to grade papers

I haven't verified this hunch yet, but I bet if you gave Claude or ChatGPT a precise rubric and asked it to use it to grade and provide feedback on a student paper, it could do a great job. You might need a few iterations. But I bet it can. Would you want it to? Presumably you would review each assessment and commentary before passing it along to the student.

How to use AI as a facilitator of conversations about traditional rhetorical practices

- In class, generate a brief essay with input from students on what the subject and audience should be, and then critique what comes back.

- consider implicit or overt bias in presentation

- assumptions

- missing perspectives or lack of perspective

- then rerun the prompt requesting a different perspective and discuss how the output differs

- discuss the default tone and style

- then ask the AI what styles it can write in, request that it rewrite the essay in one of those styles, and then discuss the different output with students

- in class, have the machine generate the same content for different audiences and discuss the choices it made

- provide students with three pieces of writing, one of which you generated with AI, and ask them, in groups or individually, to identify it and explain how they came to their conclusion

- as a group, identify qualities that sound robotic

- have students discuss how they feel about communicating with and through robots.

- discuss and have students write about the political and social implications of AI text generators.

Speculations about AIs' cognitive and social implications

This is a very partial list of questions that we, teachers and students, ought to be answering for ourselves and sharing with each other about the impact AI will have on our intellectual lives. If you are concerned about the social implications in particular, you might want to read Kate Crawford's Atlas of AI.

- Will AI reduce the number of writerly jobs available?

- Will AI automate the production of most of what we read in our daily lives, reviews, recommendations, instructions, basic explanations, that kind of thing?

- Will AI eliminate differences in voice -- homogenization?

- Will it level the playing field of communication for people whose first language is other than the target language or who didn't grow up with the cultural capital that speaking in complete, grammatically accurate sentences instills and therefore makes writing academic prose more "natural"?

- Will AI reinforce the prejudices implicit in the writing it is trained on?

- Will reading AI generated prose on a regular basis change the way humans think about reading and writing at an existential level; will it dehumanize reading and writing?

- Would you want to read formally sophisticated but AI generated poetry or fiction?

- If AIs are increasingly trained on their own writing, will they degenerate the way a species degenerates from inbreeding?

- How will AI change composition -- or any other kind of -- instruction?

Make a point of testing AIs' limits

The more specialized the knowledge-domain, the less useful the output. These generally available AI tools were not trained on highly specialized content. This is why they can't write a real academic article and only a bland facsimile of a college essay. If there were an AI trained on an academic corpus, say all of College English and MLA and a few others, then it might be able to say more detailed things, but it still couldn't generate new knowledge or provide insights other than something latent in the corpus but until then unnoticed by a human being.

Think of a prompt you suspect will stump or mislead an AI generator and see how it handles the situation. Does the failure identify a limitation of the machine or does it suggest the prompt was imperfectly phrased?

I doubt, for example, that Claude would understand if I asked a question ironically, but does it understand what irony is? I was reading a chapter of a dissertation about identity performance and con-artistry when it occurred to me that an apt title for the chapter might be Fake It Until You Make It. And then I thought a good rhetorical twist would be Fake It Until You Get Caught, because it would subvert the obvious with something more apt. Given that AI generators fill in blanks with what most probably comes next, and given the ubiquity of "fake it until you make it", I wondered how it would fill in that

______

A decent gloss. Then I asked it for an ironic completion to fake it until

_____

Clever, machine. I can forgive it for assuming Alanis Morissette's definition of irony -- an unexpected reversal of fortune. Only a poorly trained historian of rhetoric would insist on the original definition, clearly meaning the opposite of what you say. I doubt Claude or ChatGPT or any of them could get such high-context jokes. Perhaps given enough context it could seem to infer you were being ironic, but generally computers are hyper-literal. There are people, perhaps, who would be willing to spend time crafting facsimiles of human interaction, and I'm certain there are many trolls itching for a chance to fool the machine into saying inappropriate or irrelevant things, but both of those strike me as human rather than machine failures. In 2016, Microsoft created a chatbot they called Tay, for Thinking About You. They gave it a Twitter identity ("The AI with zero chill") and a Twitter handle, @TayandYou, and then they gave it to Twitter. People would respond to Tay's tweets, and Tay would use that information to tweet anew. Sixteen hours later, Microsoft had to shut Tay down because it was spewing racist, sexist vitriol. The Humans of Twitter trained it to be a troll after their own image. A similar thing happened to one of Meta's AIs in November 2022. The Galactica language model was designed to "store, combine, and reason about scientific knowledge" (Edwards). According to Edwards, Galactica's training data included "48 million papers, texts and lecture notes, scientific websites, and encyclopedias." The goal was to facilitate and accelerate the composition of literature reviews, wiki articles, and the like. When Meta offered it to the world to beta test, some people found it promising, but others found it problematic, and some set out to vividly demonstrate its problems by feeding it prompts that led it to articulate nonsense, in some cases offensive nonsense, as though it were fact.
Yann LeCun, Meta's chief AI scientist, announced the off-lining of Galactica with a tweet: "Galactica demo is off line for now. It's no longer possible to have some fun by casually misusing it. Happy?" The tweet suggests that malicious human interaction, rather than AI defects, led to the problems Galactica exhibited.

A more general version of the same experiment is simply to ask it questions you know the answer to. How does it do? What did it get right and what did it get wrong? You might want to rephrase a prompt and ask again to ensure the failure wasn't the prompt's fault.

People have noticed that these AI generators make excellent study guides, and I concur. When I've asked them to write code for me, they not only provide the code, they explain it as well. This can be a very direct way of learning something. You can also ask one to give you a step-by-step process to achieve a learning objective (or any goal, really), and what you get back might be a great way to get started. If you try this experiment a few times, I think you will discover that the less you know, the more satisfied you will be with the advice. In other words, these AIs are generalizers. You can ask them to act as a specific kind of expert and you will get less generic results, but the more you know, the more gaps you may notice. Just because it made sense doesn't mean it got it right.

Other experiments

Claude can receive TXT, PDF, and CSV files (up to five at a time), read them, and perform various tasks. I uploaded an academic article and asked it to create a five-item multiple-choice test. I knew as I hit submit that my request was vague and hopelessly optimistic. The result

Given that result, it might well be possible to upload a more lecture-like text along with a rubric and a set of important topics and get a multiple-choice test you could tweak, perhaps saving considerable time. Or not, but it's worth playing around with, I think.

The fact that it can read CSV files suggests it can analyze data, which means it might be able to offer an outline or perhaps even a rough draft based on data and some specific parameters about discipline, audience, reading-comprehension level, and so on. Since uploading files to an AI hands those files over to it, one would need to think carefully before doing something like that. But an experiment or two with safe data might be very instructive. If your prompt isn't specific enough, it will ask you for more information, and if you go through that process a few times, you may get what you want.

I asked it to summarize the content it would find at a URL I gave it. The result

The warning was amusing and the content bland and irrelevant, but "hallucination" seems a bit melodramatic. Still, ask human beings a question they can't know the answer to, and more often than not they will make up some nonsense on the spot, often with conviction. I think psychologists call that phenomenon confabulation. That the machine fails in such an all-too-human way is more impressive than it would be if it merely acknowledged its ignorance. Such reticence would be better than human.

At any rate,

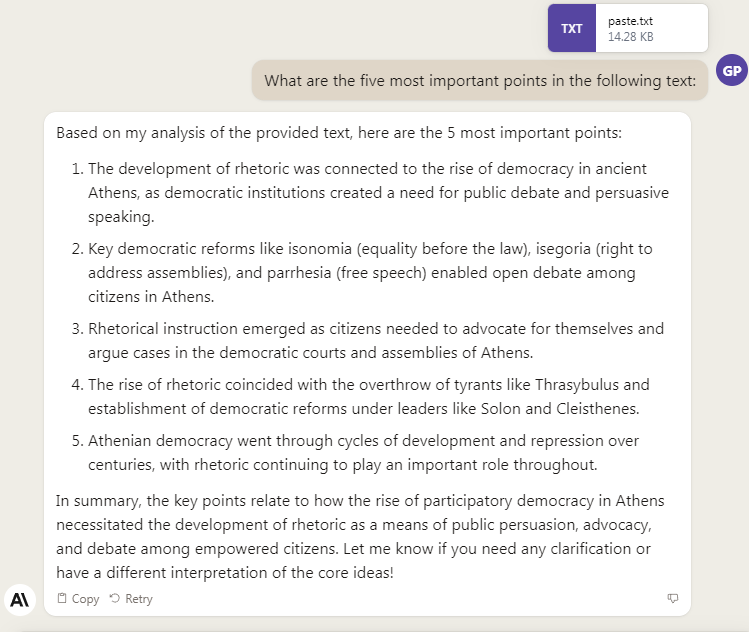

I copied the content from the same URL (which it automatically turned into a TXT file when I pressed Ctrl+V) and asked it to identify the five key points.

The result

Not bad at all.

In conclusion

He said with the tired, mocking tone of a person who has read undergraduate essays for 30 years and counting.

And then channeling Erasmus' De copia:

The bottom line -- I should ask an AI to offer alternatives to that cliché -- the bottom line is that, if properly prompted, AI text generators can provide helpful feedback and potentially useful insights into what you are trying to write and with whom you are trying to communicate. They can also act as very effective editors, given the right prompts. While we could ask them to write mundane texts for us, they still need careful supervision. They are too slickly rhetorical (without being intentionally deceptive) to be given direct access to public spaces. Send an AI-generated note of condolence and you will offend the universe. Maybe in the not-too-distant future we will be inured to robotic communications. Perhaps we will get more adept at prompting human-sounding texts from our AI assistants. And the machines themselves may evolve a more personal style. Given the competition, I suspect we humans will start writing in a more distinctly personal voice to display our humanity: eloquence over mere competence. Or maybe we will just offload all bureaucratic discourse to the machines so we can focus on less transactional forms of writing.